Signs Taken for Wonders: AI, Art & the Matter of Race

AI shares with earlier socially transformative technologies a reliance on limiting models of the “human” that embed racialized metrics for human achievement, expression, and progress. Many of these fundamental mindsets about what constitutes humanity have become institutionally codified, continuing to mushroom in design practices and research development of devices, applications, and platforms despite the best efforts of many well-intentioned technologists, scholars, policy-makers, and industries. This essay argues why and how AI needs to be much more deeply integrated with the humanities and arts in order to contribute to human flourishing, particularly with regard to social justice. Informed by decolonial, disability, and gender critical frameworks, some AI artist-technologists of color challenge commercial imperatives of “personalization” and “frictionlessness,” representing race, ethnicity, and gender not as normative self-evident categories nor monetized data points, but as dynamic social processes always indexing political tensions and interests.

This page was updated on May 3, 2022. The original essay was published on April 13, 2022.

As he grew accustomed to the great gallery of machines, he began to feel the forty-foot dynamos as a moral force, much as the early Christians felt the Cross. The planet itself felt less impressive, in its old-fashioned, deliberate, annual or daily revolution, than this huge wheel, revolving within arm’s length at some vertiginous speed, and barely murmuring–scarcely–humming an audible warning to stand a hair’s breadth further for respect of power, while it would not wake the baby lying close against its frame. Before the end, one began to pray to it; inherited instinct taught the natural expression of man before silent and infinite force. Among the thousand symbols of ultimate energy the dynamo was not so human as some, but it was the most expressive.

—Henry Adams, “The Dynamo and the Virgin”1

In astonishment at the new technologies at the turn into the twentieth century, the renowned historian Henry Adams found the Gallery of the Electric Machines “physics stark mad in metaphysics” and wondered at their profound hold on the cultural imagination.2 The dynamo that so moved and unsettled Adams was a new generator of unprecedented scale, a machine responsible for powering the first electrified world’s fair in 1893, a purportedly spectacular event presided over by President Grover Cleveland. Its power was invisible but the more potent for it: “No more relation could he discover between the steam and the electric current than between the cross and the cathedral. The forces were interchangeable if not reversible, but he could see only an absolute fiat in electricity as in faith.” For Adams, the dynamo’s effect in the world was akin to evidence of things unseen like the symbols of the Virgin or the cross, imperceptible but world-transforming currents with implications both worldly and spiritual.

I open with this discussion of the world’s fair at the fin de siècle because Adams’s dynamo is our GPT-3 (Generative Pre-trained Transformer 3), a language model that uses deep learning to produce text/speech/responses that can appear generated by a human. His exhilaration–hand-in-glove with his existential vertigo–and his internal conflict similarly speak to our contemporary aspirations for and anxieties about artificial intelligence. Adams understood that the turn to such formidable technology represented a thrilling but cataclysmic event, “his historical neck broken by the sudden irruption of forces entirely new.” Although human grappling with exponential leaps in technology dates at least to the medieval period, this particular historical precedent of a transformational moment is singularly relevant for our contemporary moment: there is a direct line from Adams’s concern with the hagiography of tech, the devaluation of the arts and humanities, and the commingling of scientific development with (racialized, ableist) narratives of progress to current debates about nearly identical phenomena today. The consequences of those fundamental mindsets and practices, institutionally codified over time, continue to mushroom in devices, applications, platforms, design practices, and research development. Unacknowledged or misunderstood, they will persist despite the best efforts of many well-intentioned technologists, scholars, policy-makers, and industries that still tend to frame and limit questions of fairness and bias in terms of “safety,” which can mute or obscure attention to issues of equity, justice, or power.3

Significantly, Adams’s response to the dynamo is neither apocalyptic jeremiad nor in the genre of salvation: that is, his concerns fell beyond the pale of narratives of dystopia or deliverance. He was no technophobe; in fact, he deeply admired scientific advances of all kinds. Rather, his ambivalence has to do with the inestimable psychological and spiritual sway of machines so impressive that “the planet itself felt less impressive,” even “old-fashioned.”4 That something man-made might seem so glorious as to overshadow creation, and so evocative of the infinite, left people feeling out of step with their own times. For Adams, those experiences signaled an epistemic break that rendered people especially receptive and open to change, but also vulnerable to idolizing false gods of a sort. He saw that the dynamo was quickly acquiring a kind of cult status, inviting supplication and reverence by its followers. The latest technology, as he personified it in his poem “Prayer to the Dynamo,” was simultaneously a “Mysterious Power! Gentle Friend! Despotic Master! Tireless Force!”5 Adams experienced awe in the presence of the dynamo: “awe” as the eighteenth-century philosopher Edmund Burke meant the term, as being overcome by the terror and beauty of the sublime. And being tech awestruck, he also instantly presaged many of his generation’s–and I would argue, our generation’s–genuflection before it.

As part of his concern that sophisticated technology inspires a kind of secular idolatry, Adams also noted its increasing dominance as the hallmark of human progress. In particular, he presciently anticipated that it might erode the power of both religion and the arts as vehicles for and markers of humanity’s higher strivings. Indeed, his experience at the Gallery taught him firsthand how fascination with such potent technology could eclipse appreciation of the arts: more specifically, of technological innovation replacing other modes of creative expression as the pinnacle of human achievement. Adams bemoaned the fact that his friend, Langley, who joined him at the exposition, “threw out of the field every exhibit that did not reveal a new application of force, and naturally, to begin with, the whole art exhibit.” The progress of which technology increasingly claimed to be the yardstick extended beyond the valuation of art to racial, ethnic, and gender scales. Most contemporary technological development, design, and impact continue to rely unquestioningly on Enlightenment models of the “human,” as well as the nearly unchanged and equally problematic metrics for human achievement, expression, and progress.

These are not rhetorical analogies; they are antecedents to AI, historical continuities that may appear obscured because the tech-ecosystem tends to eschew history altogether: discourses about AI always situate it as future-facing, prospective not retrospective. It is an idiom distinguished by incantations about growth, speed, and panoptic capture. The messy, recursive, complex narratives, events, and experiences that actually make up histories are reduced to static data points necessary in training sets for predictive algorithms. Adams’s reaction offers an alternative framing of time in contrast to marketing imperatives that fetishize the next new thing, which by definition sheds its history.

This reframing is important to note because for all the contemporary talk of disruption as the vaunted and radical mode of innovation, current discourse still often presents so-called disruptive technologies as a step in an inexorable advance forward and upward. In that sense, tech disruption is in perfect keeping with the same teleological concept of momentum and progress that formed the foundational basis by which world’s fairs ranked not only modes of human achievement but also degrees of “human.” The exhibitions catalogued not just inventions but people, classifying people by emerging racialized typologies on a hierarchical scale of progress with the clear implication that some were more human than others.6 This scale was made vivid and visceral: whether it was the tableaux vivants “ethnic villages” of the 1893 world’s fair in Chicago’s “White City” or the 1900 Paris showcase of African American achievement in the arts, humanities, and industry (images of “racial uplift” meant to counter stereotyping), both recognized how powerfully influential were representations of races’ putative progress–or lack of it.

Carrying the international imprimatur of the fairs, the exhibitions were acts of racial formation, naturalizing rungs of humanness and, indeed, universalizing the imbrication of race and progress. Billed as a glimpse into the future, the fairs simultaneously defined what was not part of modernity: what or who was irrelevant, backward, regressive in relation. Technological progress, therefore, was not simply represented alongside what (arts/humanities) or who (non-whites) were considered less progressive; progress was necessarily measured against both, indeed constituted by its difference and distance from both.

For critical theorist Homi Bhabha, such notions of progress, and the technology and symbol of it, are inextricably tied to the exercise of colonial and cultural power. His essay “Signs Taken for Wonders: Questions of Ambivalence and Authority Under a Tree outside Delhi, May 1817” critiques the “wondrous” presence of the book, itself a socially transformative technology, by beginning with the premise that innovation cannot be uncoupled from the prerogatives of those who have the power to shape realities with it:

The discovery of the book is, at once, a moment of originality and authority, as well as a process of displacement, that paradoxically makes the presence of the book wondrous to the extent to which it is repeated, translated, misread, displaced. It is with the emblem of the English book–“signs taken as wonders”–as an insignia of colonial authority and an insignia of colonial desire and discipline that I begin this essay.7

Adams spoke of awe in the presence of the dynamo. Bhabha goes further in challenging such “signs taken as wonders,” in questioning technologies so valorized that they engender awe, obeisance, and reverence as if such a response were natural, innocent of invested political and economic interests, free of market value systems.

Like all tools, AI challenges the notion that the skull marks the border of the mind. . . . New tools breed new literacies, which can engender nascent forms of knowing, feeling and telling.

—Vanessa Chang, “Prosthetic Memories, Writing Machines”8

Art sits at the intersection of technology, representation, and influence. Literature, film, music, media, and visual and graphic arts are all crucial incubators for how publics perceive tech. Storytelling impacts, implicitly or explicitly, everything from product design to public policy. Many of these narratives bear traces of literature’s earliest engagement with technology, at least since medieval times, and others–either engaged with AI or AI-enabled–are also offering new plotlines, tropes, identity formations, historiographies, and speculative futurities. Moreover, because cultural storytelling helps shape the civic imagination, it can, in turn, animate political engagement and cultural change.9

Indeed, the arts are specially poised to examine issues in technological spaces (from industry to STEM education) of equity, diversity, social justice, and power more capaciously and cogently than the sometimes reductive industry-speak of inclusion, fairness, or safety (usually simply meaning minimization of harm or death–a low bar indeed). Even before GPT-3, powerful natural language processing was enabling explorations in AI-assisted poetry, AI-generated filmscripts, AI-informed musicals, AI-advised symphonies, AI-curated art histories, and AI-augmented music.10 Many are proposing new nomenclature for hybrid genres of art, design, and tech, and fresh subfields are blooming in both academe and entertainment.11 And during the COVID-19 pandemic and intensified movements for social justice, there has been a plethora of virtual exhibitions and articles about the hot debates over the status, meaning, and valuation of AI-generated or -augmented art.12

Amidst this explosion of artistic engagement with AI, social and political AI scholars Kate Crawford and Luke Stark, in “The Work of Art in the Age of Artificial Intelligence: What Artists Can Teach Us about the Ethics of Data Practice,” offer a not uncommon perspective on the need for interdisciplinary collaboration: “Rather than being sidelined in the debates about ethics in artificial intelligence and data practices more broadly, artists should be centered as practitioners who are already seeking to make public the political and cultural tensions in using data platforms to reflect on our social world.”13 However, they also close the article by recommending that arts practitioners and scholars would do well with more technical education and that without it, their engagements and critiques will have lesser insight into and standing regarding the ethics of data practice: “One barrier to a shared and nuanced understanding of the ethical issues raised by digital art practices is a lack of literacy regarding the technologies themselves. Until art critics engage more deeply with the technical frameworks of data art, their ability to analyze and assess the merits of these works–and their attendant ethical dilemmas–may be limited.” They continue: “a close relationship to computer science seemed to offer some artists a clearer lens through which to consider the ethics of their work.”14

Certainly, continuing education is usually all to the good. But I would welcome the equivalent suggestion that those in data science, computer science, engineering, and technology, in turn, should continue to educate themselves about aesthetics and arts practices–including at least a passing familiarity with feminist, queer, decolonial, disability, and race studies approaches to AI often central to those practices–to better understand ethical debates in their respective fields.15 Without that balance, the suggestion that artists and nontechnical laypeople are the ones who primarily need education, that they require technical training and credentialing in order to have a valid(ated) understanding of and legitimate say in the political, ethical, social, and economic discussions about AI, is a kind of subtle gatekeeping that is one of the many often unacknowledged barriers to cross-disciplinary communication and collaboration. Given the differential status of the arts in relation to technology today, it is usually taken for granted that artists (not technologists, who presumably are doing more important and time-consuming work in and for the world) have the leisure and means not only to gain additional training in other fields but also to do the hard translational work necessary to integrate those other often very different disciplinary practices, vocabularies, and mindsets to their own creative work. That skewed status impacts who gains the funding, influence, and means to shape the world.

Instead of asking artists to adapt to the world models and pedagogies informing technological training–which, as with any education, is not simply the neutral acquisition of skills but an inculcation to very particular ways of thinking and doing–industry might do well to adapt to the broader vernacular cultural practices and techne of marginalized Black, Latinx, and Indigenous communities. Doing so might shift conversation in the tech industry from simply mitigating harm or liability from the differentially negative impact of technologies on these communities. Rather, it would require a mindset in which they are recognized as equal partners, cultural producers of knowledge(s), as the longtime makers, not just the recipients and consumers, of technologies.16 In fact, artist-technologist Amelia Winger-Bearskin, who is Haudenosaunee (Iroquois) of the Seneca-Cayuga Nation of Oklahoma, Deer Clan, makes a case that many of these vernacular, often generational, practices and values are what she calls “antecedent technologies,” motivated by an ethic that any innovation should honor its debt to those seven generations prior and pay it forward seven generations.17

In this way, many contemporary artist-technologists engage issues including, but also going beyond, ethics to explore higher-order questions about creativity and humanity. Some offer non-Western or Indigenous epistemologies, cosmologies, and theologies that insist on rethinking commonly accepted paradigms about what it means to be human and what ways of doing business emerge from that. Perhaps most profoundly, then, the arts can offer different, capacious ways of knowing, seeing, and experiencing worlds that nourish well-being in the now and for the future. It is a reminder of and invitation to world models and frameworks alternative to what can seem at times to be dominating or totalizing technological visions. In fact, one of the most oft-cited criticisms of AI discourse, design, and application concerns its univision, its implied omniscience, what scholar Alison Adam calls “the view from nowhere.” It is challenged by art that offers simultaneous, multiple, specifically situated, and sometimes competing points of view and angles of vision that enlarge the aperture of understanding.18

For instance, informed by disability culture, AI-augmented art has drawn on GANs (generative adversarial networks) to envision non-normative, including neurodivergent, subjects that challenge taken-for-granted understandings of human experience and capability. The presumption of a universal standard or normative model, against which “deviance” or “deviation” is measured, is nearly always implied to be white, cis-gendered, middle-classed, and physically and cognitively abled. That fiction of the universal subject–of what disability scholar and activist Rosemarie Garland-Thomson terms the “normate”–has historically shaped everything from medical practice and civil rights laws to built environments and educational institutions. It also often continues to inform technologies’ development and perceived market viability and use-value. Representations of “human-centered” technology that include those with mental or physical disabilities often call for a divestment from these usual ways of thinking and creating. Such a direct critique is posed in art exhibitions such as Recoding CripTech. As the curatorial statement puts it, the installations reimagine “enshrined notions of what a body can be or do through creative technologies, and how it can move, look or communicate. Working with a broad understanding of technology . . . this multidisciplinary community art exhibition explores how disability–and artists who identify as such–can redefine design, aesthetics and the relationship between user and interface.” Works included in Recoding CripTech that employ artificial intelligence, such as M Eifler’s “Prosthetic Memory” and “Masking Machine,” suggest a provocative reframing of “optimization” or “functionality” in technologies that propose to augment the human experience.19

Race–racism–is a device. No More. No less. It explains nothing at all. . . . It is simply a means. An invention to justify the rule of some men over others. [But] it also has consequences; once invented it takes on a life, a reality of its own. . . . And it is pointless to pretend that it doesn’t exist–merely because it is a lie!

—Tshembe in Les Blancs (1965) by Lorraine Hansberry

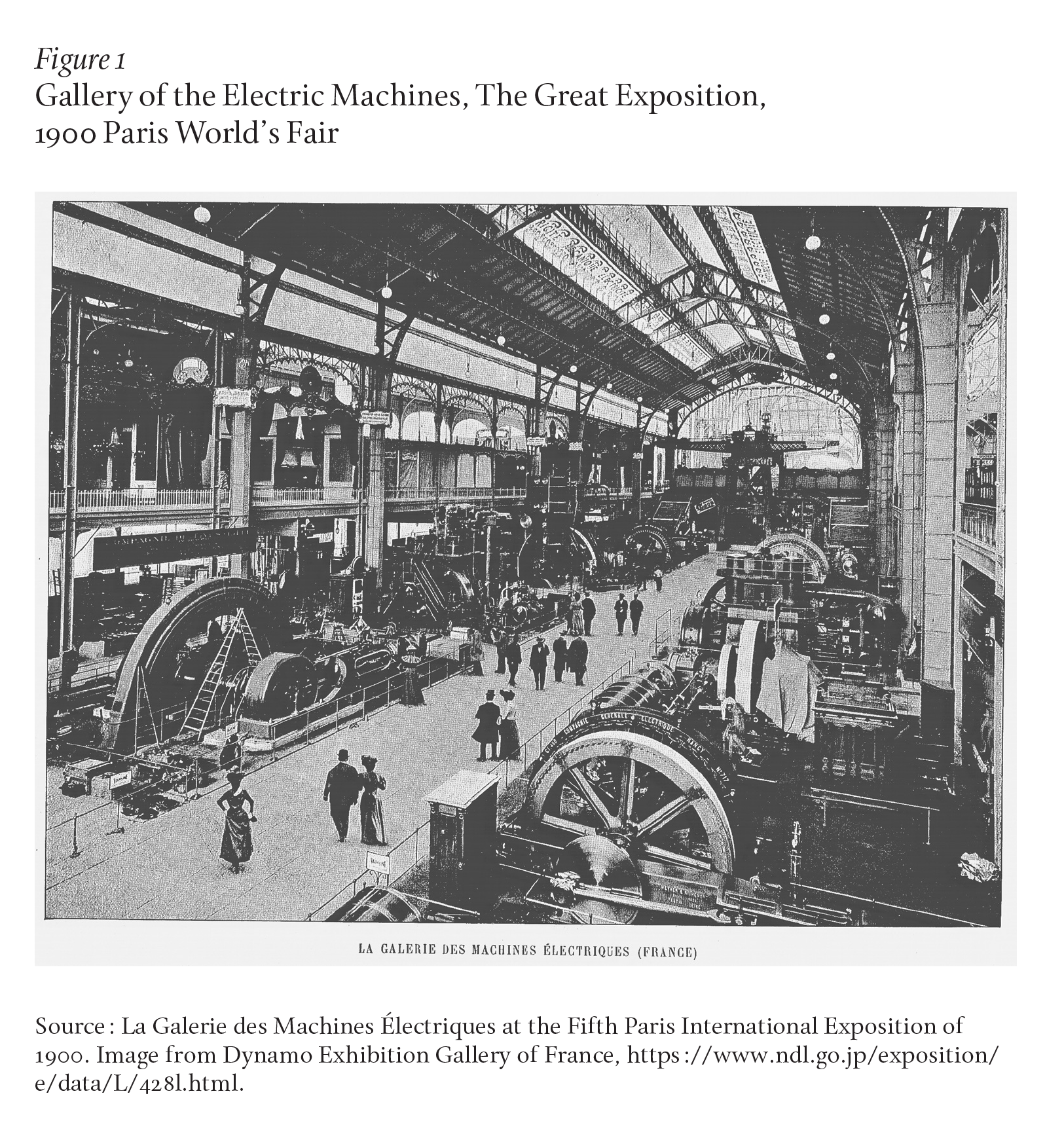

Rashaad Newsome’s installation Being represents another artistic provocation that reframes both the form and content of traditional technological historiographies often told from that “view from nowhere.” Newsome, a multimedia artist and activist, makes visible the erased contributions to technology and art by people of African descent. Newsome terms the interactive social humanoid Being 2.0 an AI “griot,” a storyteller. But unlike most social robots commanded to speak, Being is intentionally “uppity”: wayward, noncompliant, disobedient, with expressive gestures drawn from Black Queer vogue dance repertoire meant as gestures of decolonial resistance to the labor and service that social robots are expected to perform. It upends the historical association of robots and slaves (in the etymology of the Czech word, “robot” translates to “slave”) in movement, affect, function, and speech. Taking aim at the limited training data sets used in natural language processing, Newsome draws on broader archives that include African American vernacular symbolic systems.20 And since language carries cultural knowledge, Being’s speech not only expands vocabularies but also reimagines how the standardized expressions of emotion and behavior often deployed in AI are racially and culturally encoded.21 In fact, Being is an attempt to redress the historical violence of antiquated notions about race, the more disturbing because the representations of race, reduced to seemingly self-evident gradations of color and physiognomy, are being actively resurrected in AI development and application.

Race is always a negotiation of social ascription and personal affirmation, a process of what sociologists Michael Omi and Howard Winant term “racial formation.” Omi and Winant refer to racial formation as a way of historicizing the practices and circumstances that generate and renew racial categories and racializing structures:

We define racial formation as the sociohistorical process by which racial categories are created, inhabited, transformed, and destroyed. . . . Racial formation is a process of historically situated projects in which human bodies and social structures are represented and organized. Next we link racial formation to the evolution of hegemony, the way in which society is organized and ruled. . . . From a racial formation perspective, race is a matter of both social structure and cultural representation.22

The expression “racial formation” is therefore a reminder that race is not a priori. It is a reminder to analyze the structural and representational–not just linguistic–contexts in which race becomes salient: the cultural staging, political investments, institutional systems, and social witnessing that grant meanings and values to categories. A full accounting of race therefore involves asking in whose interest is it that a person or people are racialized in any given moment in time and space?

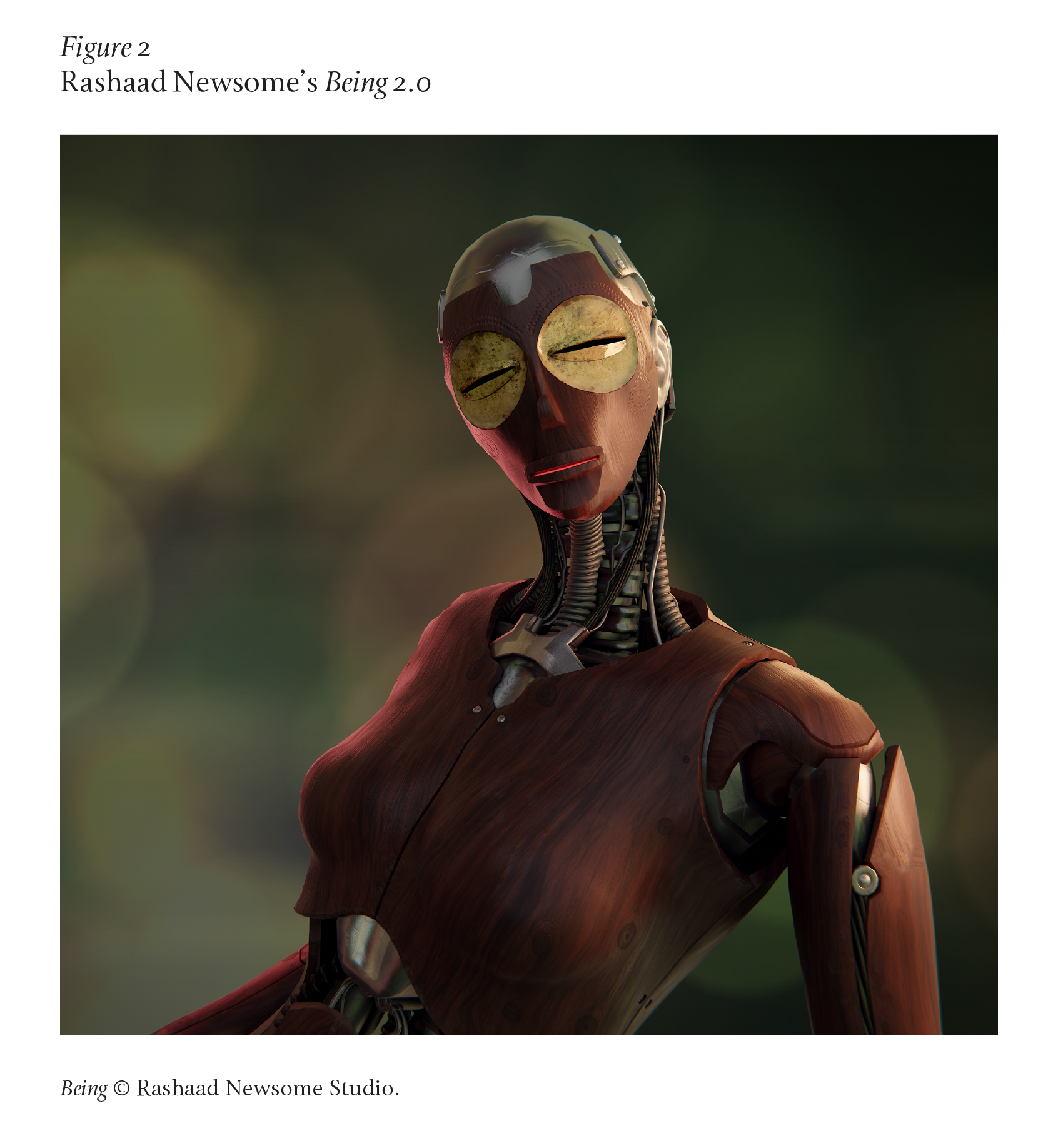

Overlooked, for instance, in many debates over racial bias, surveillance, and privacy in facial recognition technology is the practice of coding “race” or “ethnicity” as fixed, static programmable variables, something writ on the face or otherwise available as physically intelligible–an outdated approach to race that harkens back to nineteenth-century phrenology and other pseudoscience mappings of racial traits. Moreover, that practice renders opaque how categories are never merely descriptive, disinterested renderings of facts or things even though they cannot be purged of the value systems that animate their creation and make them intelligible for technological use–at least as currently developed–in the first place. Additionally, the claim to a universal objectivity is one of the “epistemic forgeries,” according to Yarden Katz, who describes it as one of the “fictions about knowledge and human thoughts that help AI function as a technology of power” because it enables “AI practitioners’ presumption that their systems represent a universal ‘intelligence’ unmarked by social context and politics.”23 That drive for comprehensive typing and classification, for a universal compendium, cannot easily accommodate race other than as a technical problem in mapping variation of types.24

To illustrate why AI representations are so problematic, let me take a seemingly innocuous example in the new algorithmic application “Ethnicity Estimate,” part of the Gradient app, which purports to diagnose percentages of one’s ethnic heritage based on facial recognition technology (FRT). Such an app is significant precisely because popular data-scraping applications are so often pitched as convenient business solutions or benign creative entertainment, bypassing scrutiny because they seem so harmless, unworthy of research analysis or quantitative study. Critically examining such issues would be a direct impediment to a seamless user experience with the product; thus designers and users are actively disincentivized from doing so. Like many such applications, Ethnicity Estimate problematically uses nationality as a proxy for ethnicity and reduces population demographics to blood quantum.

Or consider Generated Photos: an AI-constructed image bank of “worry-free” and “infinitely diverse” facial portraits of people who do not exist in the flesh, which marketers, companies, and individuals can use “for any purpose without worrying about copyrights, distribution rights, infringement claims or royalties.”26 In creating these virtual “new people,” the service offers a workaround for privacy concerns. Generated Photos bills itself as the future of intelligence, yet it reinscribes the most reductive characterizations of race: among other parameters users can define when creating the portraits, such as age, hair length, eye color, and emotion through facial expression, the racial option has a dropdown of the generic homogenizing categories Asian, African American, Black, Latino, European/white.

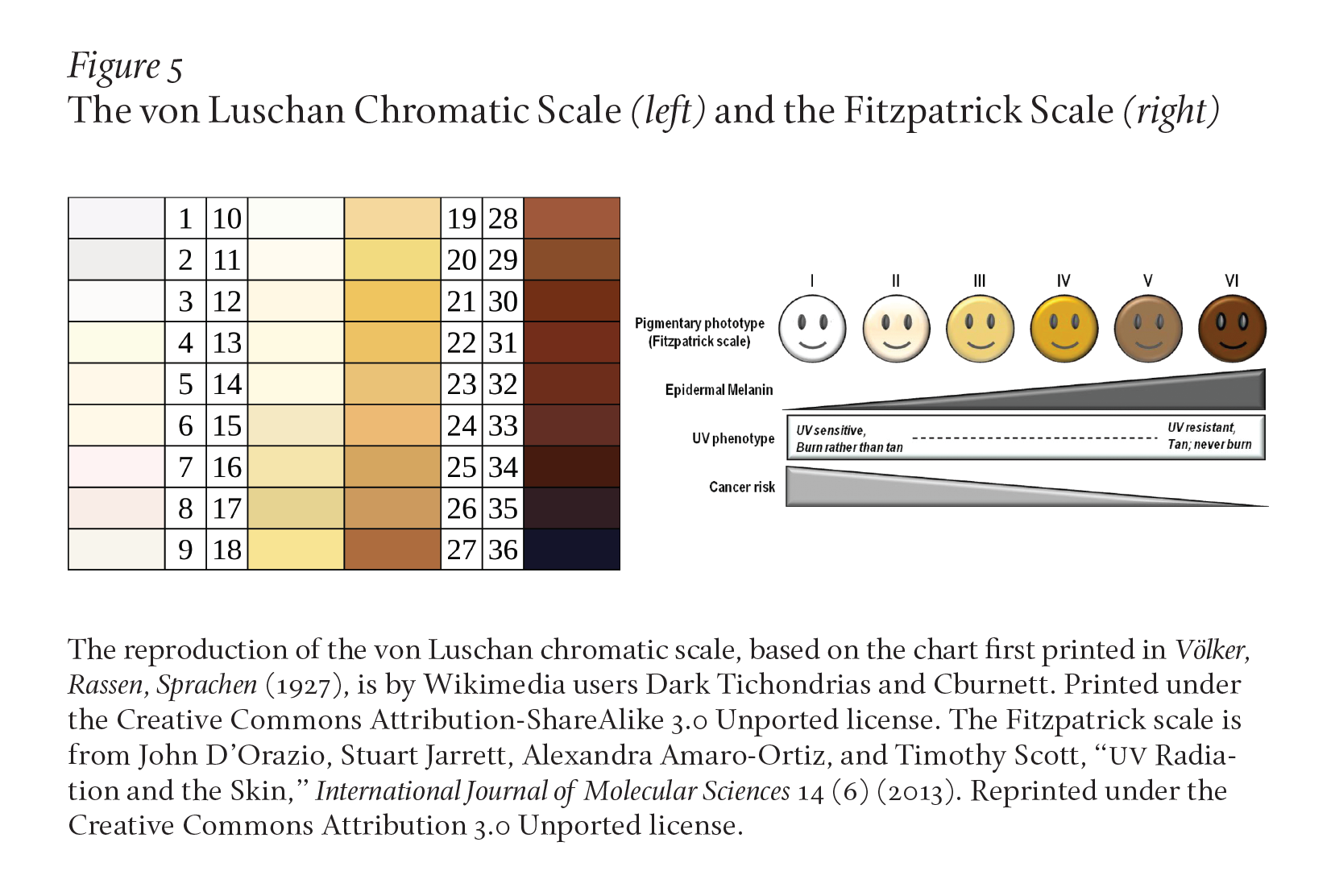

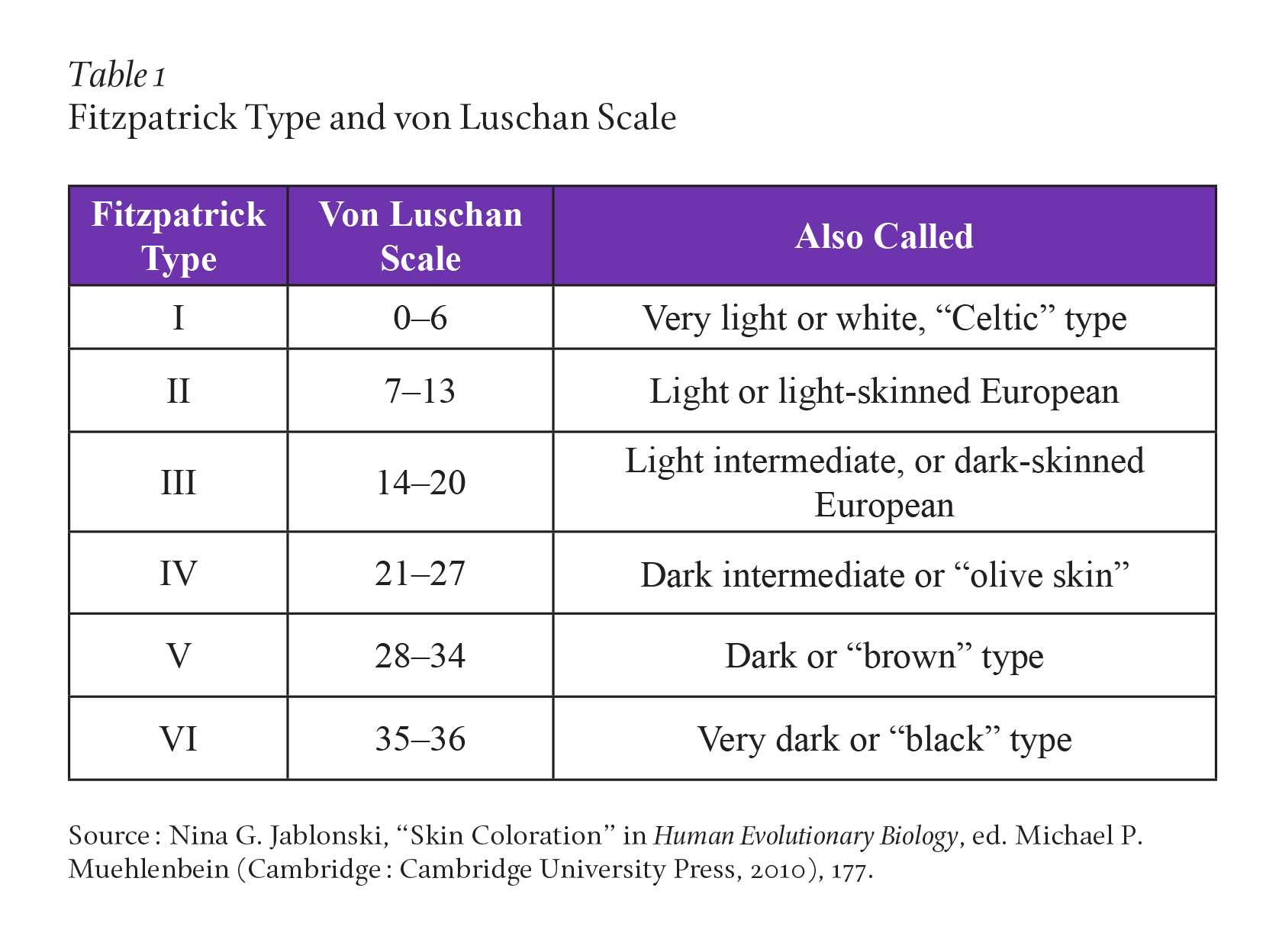

Skin color options are similarly presented as self-evident and unproblematic givens, a data set based on an off-the-shelf color chart. There is a long racializing history of such charts, from the von Luschan chromatic scale, used throughout the first half of the twentieth century to establish racial classifications, to the Fitzpatrick scale, still common in dermatologists’ offices today, which classifies skin types by color, symbolized by six smiling emoji modifiers. Although the latter makes no explicit claim about races, the emojis clearly evoke the visuals as well as the language of race with the euphemism of “pigmentary phototype.”

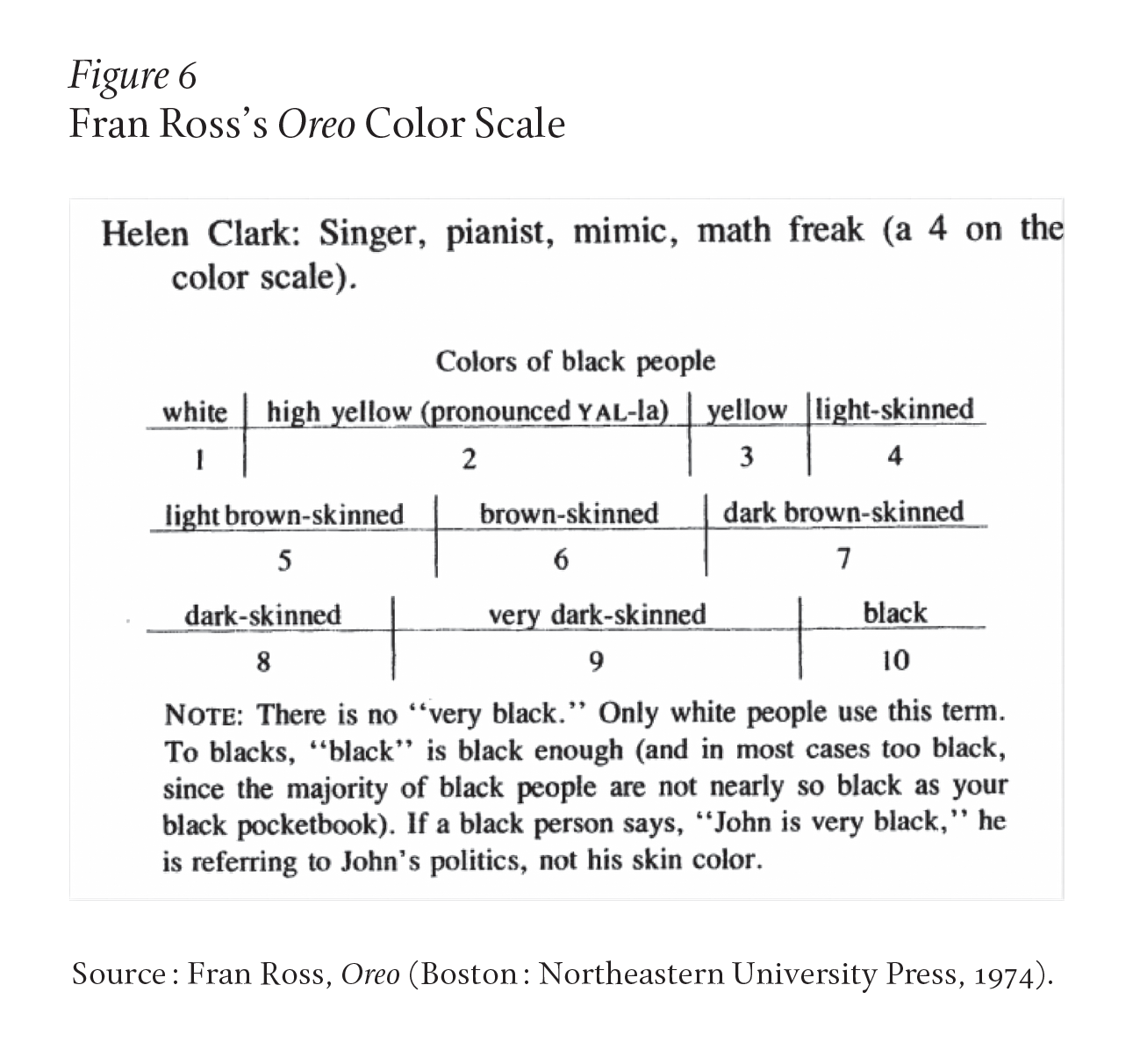

All these types are readily serviceable as discrete data points, which makes them an easy go-to in algorithmic training, but the practice completely elides the fact that designations of “dark” or “light” are charged cultural and contextual interpretations that are always negotiated in context and in situ.25 The relevance and meaning of race emerge through social and cultural relations, not light frequencies. Fran Ross’s brilliant, satirical novel Oreo (1974) offers a wry send-up of attempts to apply color charts to social identities, shown as Figure 6.26

Although new AI technologies show promise in diagnosing medical conditions of the skin, thinking of racial identification primarily in terms of chromatic scales or dermatoscopic data deflects attention, to put it generously, from the long history of the damaging associations of skin color and race that gave rise to early technologies like this in the first place, whether it was the “science” of phrenology, IQ tests, or fingerprinting, and with implications, more recently, for the use of biometrics.27 At a minimum, it ignores the imbrication of “race” in pigmentocracies and colorism, the historical privileging of light skin, and the various rationales for identifying what counts as “light-skinned.” Colorism, a legacy of colonialism, continues to persist in contemporary hierarchies of value and social status, including aesthetics (who or what is ranked beautiful, according to white, Western standards), moral worth (the religious iconography of “dark” with evil and “light” with holy continues to saturate languages), social relations (for instance, the “paper bag test” of the twentieth century was used as a form of class gatekeeping in some African American social institutions),28 and the justice system (since social scientists have documented the perceptual equation of “blackness” with crime, and thus those perceived as having darker skin as a priori criminally suspect).29

Why does this matter? Because it suggests that the challenges in representing race in AI are not something technological advances in any near or far future could solve. Rather, they signal cultural and political, not technical, problems to address. The issue, after all, is not merely a question of bias (implicit or otherwise), nor of inaccuracy (which might lead some to think the answer is simply the generation of more granular categories), nor of racial misrecognition (which some might hear as simply a call for ever more sophisticated FRT), nor even of ending all uses of racial categorization.30 It matters because algorithms trained on data sets of racial types reinforce color lines, literally and figuratively remanding people back in their “place.” By contrast, as I have suggested, the increasingly influential AI artist-technologists, especially those of color, are among those most dynamically questioning and reimagining the commercial imperatives of “personalization” and “frictionlessness.” Productively refusing colorblindness, they represent race, ethnicity, and gender not as normative, self-evident categories nor monetizable data points, but as the dynamic social processes–always indexing political tensions and interests–which they are. In doing so, they make possible the chance to truly create technologies for social good and well-being.

Something has happened. Something very big indeed, yet something that we have still not integrated fully and comfortably into the broader fabric of our lives, including the dimensions–humanistic, aesthetic, ethical and theological–that science cannot resolve, but that science has also (and without contradiction) intimately contacted in every corner of its discourse and being.

—Stephen Jay Gould, The Hedgehog, the Fox, and the Magister’s Pox (2003)31

I cite what may seem minor examples of cultural ephemera because, counterintuitively, they hint at the grander challenges of AI. They are a thread revealing the pattern of “something very big indeed,” as historian of science Stephen Jay Gould put it. Certainly there are ethical, economic, medical, educational, and legal challenges facing the future of AI. But the grandest technological challenge may in fact be cultural: the way AI is shaping the human experience. Through that lens, the question becomes not one of automation versus augmentation, in which “augmenting” refers to economic productivity, but rather to creativity. That is, how can AI best augment the arts and humanities and thus be in service to the fullness of human expression and experience?

This essay opened with Henry Adams’s moment of contact with the Dynamo’s “silent and infinite force,” as he put it, which productively denaturalizes the world as he knows it, suspends the usual epistemological scripts about the known world and one’s place in it. It is a sentiment echoed almost verbatim one hundred years later by Gould, witnessing another profound technological and cultural upending. Writing at the turn into our own century, Gould, like Adams, cannot fully articulate the revelation except to say poignantly that “something has happened,” that every dimension of “the broader fabric of our lives” is intimately touched by a technology whose profound effect cannot be “solved” by it. That liminal moment for Adams, for Gould, and for us makes space for imagining other possibilities for human creativity, aesthetic possibilities that rub against the grain and momentum of current technological visions, in order to better realize the “magisteria of our full being.”32

© 2022 by Michele Elam. Published under a CC BY-NC 4.0 license.