Small Machines

Over the last fifty years, small has emerged as the new big thing. The reduction of information and electronics to nanometer dimensions has revolutionized science, technology, and society. Now scientists and engineers are creating physical machines that operate at the nanoscale. Using approaches ranging from lithographic patterning to the co-opting of biological machinery, new devices are being built that can navigate, sense, and alter the nanoscale world. In the coming decades, these machines will have enormous impact in fields ranging from biotechnology to quantum physics, blurring the boundary between technology and life.

Look round the world, contemplate the whole and every part of it: you will find it to be nothing but one great machine, subdivided into an infinite number of lesser machines, which again admit of subdivisions to a degree beyond what human senses and faculties can trace and explain. All these various machines, and even their most minute parts, are adjusted to each other with an accuracy which ravishes into admiration all men who have ever contemplated them. The curious adapting of means to ends, throughout all nature, resembles exactly, though it much exceeds, the productions of human contrivance; of human design, thought, wisdom, and intelligence.

– David Hume, Dialogues Concerning Natural Religion, 1779

How small can we make things? The physicist, scientific raconteur, and future Nobel Prize recipient Richard Feynman asked this question in December 1959. In a now-famous talk at the annual meeting of the American Physical Society, he took that very simple question and followed it to its end. His talk helped define the field of nanotechnology. With insight and precision, Feynman clearly outlined the promises and challenges of making things small. It was a clarion call, shaping the field of nanoscience over the next fifty years – a field that has, in turn, reshaped the world.

The program of miniaturization that Feynman outlined in 1959 can be divided into three main parts:

1. miniaturization of information;

2. miniaturization of electronics; and

3. miniaturization of machines.

Parts 1 and 2 of the revolution were already under way by the time Feynman gave his speech. (He was as much a reporter as a visionary.) Companies like Fairchild Semiconductor in the San Francisco Bay area and Texas Instruments in Dallas were making transistors and assembling them into integrated circuits. Gordon Moore, cofounder and former CEO of Intel, would soon begin counting the number of transistors per chip, noticing the number was doubling every year or so. He predicted that the trend would continue for at least another decade.

After five decades of Moore’s Law, we now have computers with 25 nanometer (nm) feature sizes. To put that in perspective, with a 25 nm pen one could draw a map of the world on a single Intel wafer, recording features down to the scale of an individual human being. Furthermore, running at a 2 gigahertz clock speed, a computer can perform as many operations in a second as a human can have thoughts in a lifetime.
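The two comparisons above are easy to check with back-of-the-envelope arithmetic. The sketch below is only an illustration: the 300 mm wafer diameter, the 40,000 km circumference of the Earth, the 80-year lifetime, and the rate of one thought per second are assumptions introduced here, not figures from the text.

```python
# Rough check of the "map of the world on a wafer" and "thoughts in a lifetime" comparisons.
FEATURE_SIZE_M = 25e-9          # 25 nm "pen" width, from the text
WAFER_DIAMETER_M = 0.30         # assumption: a 300 mm wafer
EARTH_CIRCUMFERENCE_M = 4.0e7   # assumption: about 40,000 km around the equator
CLOCK_HZ = 2e9                  # 2 GHz clock speed, from the text
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def map_resolution_m(feature=FEATURE_SIZE_M, wafer=WAFER_DIAMETER_M,
                     world=EARTH_CIRCUMFERENCE_M):
    """Ground distance covered by one pen stroke if the whole circumference spans the wafer."""
    scale = world / wafer          # metres of Earth per metre of wafer
    return feature * scale


def lifetime_thoughts(years=80, thoughts_per_second=1.0):
    """Thoughts in a lifetime under the assumed (hypothetical) rate of one per second."""
    return years * SECONDS_PER_YEAR * thoughts_per_second


print(f"smallest map feature: about {map_resolution_m():.1f} m on the ground")
print(f"operations per second: {CLOCK_HZ:.1e}")
print(f"thoughts per lifetime: {lifetime_thoughts():.1e}")
```

Under these assumptions a single stroke of the pen covers a few meters of ground, and the two counts in the second comparison land within a factor of two of each other.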

As the integrated circuit shrank, a second revolution was taking place in data storage, with magnetic-core memories, hard drives, and flash memories steadily pushing down the size of a bit of data. Information is now pervasive, and it is small, with bit sizes measured in tens of nanometers. We are awash in digital information – zettabytes of it – adding the equivalent of a hundred thousand books every year to the global bookshelf for every man, woman, and child on the planet. In this age of big data, terabytes of information are available on everything from human genome sequences to astrophysical maps of stars and galaxies.

The miniaturization of computing and information storage is the most important technological development of the last half-century. But what about Part 3 of Feynman’s program? Where are the nanomachines? And what exactly do we mean by nanomachine?

Simply stated, a machine is a device that accomplishes a task, usually with an input of energy and/or information. By this definition, a computer is a machine; so for our purposes, we’ll narrow the definition to a device that operates when something physical moves. Such a machine can be as simple as a vibrating reed or as complex as an automobile. To be a nanomachine, it should be submicron, at the very edge of what can be resolved in a standard optical microscope.

In the last decade, we have seen critical advances in both the scientific underpinnings and the fabrication technologies needed to create, study, and exploit nanomachines. These miniature devices translate or vibrate, push or grab; they record, probe, and modify the nanoscale world around them. Progress has come from two very different approaches, each bringing its own ideas, themes, and materials to bear. The first approach, which I call the lithographer’s approach, adopts the techniques of the microelectronics revolution discussed above. The second, the hacker’s approach, seeks to appropriate the molecular machinery of life. I examine these approaches in turn and consider what is happening at the interface of these two disciplines. Finally, I look at emerging approaches that will take nanomachinery to the next level. My goal is not to offer a comprehensive survey, but rather to present a few snapshots that give a sense of the current state of the field as well as where it might be headed.

* * *

If quantum mechanics hasn’t profoundly shocked you, you haven’t understood it yet.

– Niels Bohr

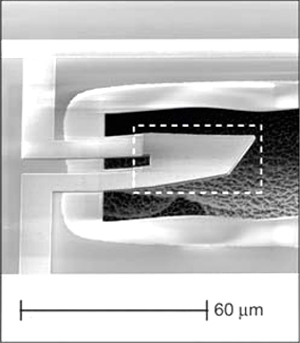

In 2010, physicists at the University of California, Santa Barbara, created what some have called the first quantum machine: that is, a machine whose operation follows the laws of quantum mechanics (see Figure 1).1 It is the latest breakthrough to emerge from the lithographer’s approach to nanofabrication. This technology, usually called MEMS (MicroElectroMechanical Systems) or NEMS (NanoElectroMechanical Systems), exploits and extends the lithographic, thin-film deposition, and etching techniques of the microelectronics industry to make machines that move. The field of MEMS, which has roots stretching back to the 1980s, is now a $10 billion/year industry, with products ranging from accelerometers in airbags to microfluidic valves for lab-on-a-chip systems to tiny mirrors for steering light in projectors. For example, Apple’s iPhone 4 has two MEMS microphones and a three-axis MEMS gyroscope that detects when the phone rotates.

Figure 1

A Quantum Machine

Created by a team of physicists at the University of California, Santa Barbara, this nanomachine was the first to demonstrate quantum behavior in a mechanical system. Source: Aaron D. O’Connell et al., “Quantum Ground State and Single-Phonon Control of a Mechanical Resonator,” Nature 464 (2010): 697; used here with permission from the authors.

The field of NEMS pushes this approach to its limits, using advanced lithography to create mechanical devices with dimensions comparable to those found in the smallest integrated circuits. The reason is not simply miniaturization for its own sake: the rules of operation of nanomachines can be fundamentally different from those of their larger-scale counterparts. In particular, the possibility of seeing quantum behavior in nanoscale machines has tantalized and energized the field of NEMS for more than a decade. The counterintuitive rules of quantum mechanics – quantized energies, quantum tunneling, zero-point fluctuations, and the Schrödinger’s cat paradox – have been tested with electrons and photons for nearly a hundred years. But can the rules of quantum mechanics also manifest in mechanical machines?

The UC-Santa Barbara group showed that the answer is yes. They used a vibrating nanoscale beam, one of the simplest possible nanomachines. According to the rules of quantum mechanics, the amplitude of the object’s vibration is quantized, just like the orbits of electrons in an atom. Furthermore, even when cooled to absolute zero, the beam should exhibit quantum fluctuations in its position. A number of research groups have explored various geometries of resonators to try to reach these quantum limits, along with various techniques to detect the beam’s minuscule motion.

The physicists at UC-Santa Barbara found success using a novel oscillating mode of the beam and a clever detection scheme involving a quantum superconducting circuit. They were able to cool the oscillator to its ground state, before adding a single vibrational quantum of energy and measuring the resulting motion. They were even able to put the device in a quantum superposition, where it was simultaneously in its ground state and also oscillating, the two possibilities interfering with each other like Schrödinger’s dead-and-alive cat. Science magazine chose this experiment as its Breakthrough of the Year in 2010: the first demonstration of quantum behavior in a mechanical system.
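Why is cooling a mechanical oscillator to its ground state so hard? A rough way to see it is to compare the size of one vibrational quantum, hf, with the thermal energy, k_B T. The sketch below uses the standard Bose-Einstein formula for the mean number of quanta in a mode at thermal equilibrium. The frequencies and the temperature are illustrative assumptions chosen for this example (a gigahertz-range mode and a megahertz-range mode, both at 25 millikelvin); they are not values quoted in the text.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def thermal_occupation(frequency_hz, temperature_k):
    """Mean number of vibrational quanta in a mode at thermal equilibrium."""
    x = H * frequency_hz / (K_B * temperature_k)   # quantum energy / thermal energy
    return 1.0 / math.expm1(x)                     # 1 / (exp(x) - 1)


# Assumed, illustrative operating points (not taken from the text).
for label, f_hz in [("GHz-range mode", 6e9), ("MHz-range mode", 1e6)]:
    n = thermal_occupation(f_hz, temperature_k=0.025)
    print(f"{label}: about {n:.2g} quanta on average at 25 mK")
```

With these assumed numbers, the high-frequency mode sits almost entirely in its ground state, while the low-frequency mode still holds hundreds of thermal quanta at the same temperature. That contrast suggests why the choice of oscillating mode matters as much as the refrigerator.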

So what? Why should we care about quantum machines? The first reason is pure curiosity, the drive to show that physical machines are also subject to quantum rules. But these devices may also have applications in the field of quantum information, where the digital bits of a computer are handled as quantum objects. They also stretch the boundaries of physical law, testing macro laws at the nanoscale and nano laws at the macro scale.

For example, a number of theories have posited that gravity may act differently on small objects. Can these effects be detected in a nanoscale oscillator? Moving in the other direction, is there a scale at which the rules of quantum mechanics cease to work, thereby forcing a revision of the fundamental laws of quantum theory? These are scientific long shots; but a measurement that changed our notions about gravity or quantum mechanics would profoundly challenge our understanding of the workings of the universe.

* * *

Over the next 20 years synthetic genomics is going to become the standard for making anything.

– Craig Venter

The lithographer’s nanomachines, for all their progress, pale in comparison to the machines of life. The MEMS devices in your iPhone cannot begin to match the complexity and sophistication of the simplest bacterium. So how far are we from building something more like a bacterium – say, a nanosubmarine that can travel through the bloodstream, searching out cancer cells? Or even better, how long until we have a machine that can make copies of itself, growing exponentially until there are trillions? We lithographers can only shake our heads in awe at the power of biology. Just one simple mechanical component – for example, the rotary motor that powers the flagellum of a bacterium – is far beyond our abilities. A lithography-based technology that would even remotely match the capabilities of life is decades away at best. But what if we don’t want to wait for decades? What if we want our nanosubmarines now?

There is a shortcut, a hack, and one that humans have exploited before. Before humans could build tractors, they harnessed oxen to pull their ploughs. We already have one fully functioning nanotechnology: life. So why not hack it? Never mind decades of developing ever-more complex machines by lithographic processes and teaching them to work together in more sophisticated systems; just take control of the bacterium the way we did livestock. This is the dream of synthetic biology: to take unicellular life, harness it, reprogram it, and control its design down to the last amino acid.

Synthetic biology is the latest link in a long chain spanning from the domestication of animals, to the invention of farming, to animal and plant breeding, and on to genetic engineering. But synthetic biology aims to advance to the next level, albeit at the scale of the bacterium. The goal is to usurp cellular biological machines, to turn life into an engineering discipline. Instead of simply tinkering, one would create cells in the same way that an integrated circuit is assembled, mixing and matching motors and metabolic pathways, all programmed in the language of DNA. Sit at a computer, type out a genetic code, push a button, and see your dream of life come to be.

Researchers at the J. Craig Venter Institute in Maryland took a major step forward in 2010, creating what they called the first artificial organism.2 The project was a tour de force; it took more than a decade and cost tens of millions of dollars. First, the group painstakingly built the genome of a known bacterium from scratch, synthesizing and stitching together the strands of DNA until they had reproduced the entire operating instructions for a cell. To prove ownership, they added a few genetic watermarks, including their names and quotes from James Joyce (“To live, to err, to fall, to triumph, to recreate life out of life”) and Richard Feynman (“What I cannot build I cannot understand”), all rendered in the ACGT (adenine, cytosine, guanine, and thymine) alphabet of life. Next, they put that genome into the shell of another bacterium, Mycoplasma capricolum, whose own genome had been removed. After some jiggering, they got the new organism to boot up, creating what they claimed as the first artificial life form. While many have criticized the work as overly hyped, it is nonetheless a landmark in synthetic biology, a demonstration of what is possible. It was named one of the runners-up for the 2010 Breakthrough of the Year by Science magazine, losing out to the quantum machine from the team at UC-Santa Barbara.
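To give a feel for how text can be rendered in the ACGT alphabet, here is a minimal sketch of one possible encoding. It is a hypothetical scheme invented for this illustration (two bits per base, four bases per byte), not the cipher the Venter Institute team actually used; the function names are likewise made up.

```python
BASES = "ACGT"  # assumed mapping: A=00, C=01, G=10, T=11


def text_to_dna(text: str) -> str:
    """Encode text as DNA letters, four bases per UTF-8 byte (toy scheme)."""
    out = []
    for byte in text.encode("utf-8"):
        for shift in (6, 4, 2, 0):                 # most significant bit pair first
            out.append(BASES[(byte >> shift) & 0b11])
    return "".join(out)


def dna_to_text(dna: str) -> str:
    """Decode a sequence produced by text_to_dna back into text."""
    if len(dna) % 4 != 0:
        raise ValueError("sequence length must be a multiple of 4")
    data = bytearray()
    for i in range(0, len(dna), 4):
        byte = 0
        for base in dna[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)
        data.append(byte)
    return data.decode("utf-8")


quote = "To live, to err, to fall, to triumph, to recreate life out of life"
watermark = text_to_dna(quote)
print(len(watermark), "bases:", watermark[:24] + "...")
print(dna_to_text(watermark) == quote)
```

A real watermark must also avoid sequences that the cell would misread as genes or regulatory signals, a constraint this toy encoding ignores.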

What are such artificial life forms good for? If one believes Craig Venter’s quote at the start of this section, it appears that there is little they wouldn’t be good for. The practitioners of synthetic biology are working to reprogram organisms to make everything from cheap malaria medications to biofuels. The promise is great, but the task is much harder than it seems. The giddy early days of the field are giving way to an appreciation of the complexity and finicky nature of biological organisms. Synthetic biologists have schemes to fix this, such as devolving life to a version simple enough for the genetic programmer to exert full control. They are stripping down simple bacteria to the minimal state needed to survive, where all the remaining parts and their interrelationships are fully understood.

Hacking life stirs up fear as well as hope. Venter’s dictum of synthetic biology as the standard for making everything could also include new and dangerous pathogens. What’s to stop a synthetic biologist from accidentally creating a dangerous organism, or a terrorist from knowingly creating one? On one hand, the danger may be overstated. The world is rife with nanomachines trying to kill us: viruses and bacterial infections have been perfecting their skills for eons. It is no trivial matter to come up with something truly new that our bodies could not handle. On the other hand, we do know that parasites and hosts coevolve, tuning their responses to each other in a delicate dance. The introduction of a known organism in a new setting could trigger dramatic rearrangements of these ecological relationships.

* * *

The machine does not isolate man from the great problems of nature but plunges him more deeply into them.

– Antoine de Saint-Exupéry

A new class of nanomachines is emerging that combines the best of the lithographer’s and the hacker’s approaches. These machines marry the speed and processing power of microelectronics and optics with the functionality of biological machines. The most dramatic examples are found in the field of DNA sequencing. The promise is easy to see: the human genome consists of three billion bases. What if we could read the genetic code at the speed of a modern microprocessor? A genome could be sequenced in seconds.

Nearly all next-generation sequencing techniques involve the careful integration of biological genomic machinery with electronic/optical elements to read and collect the data. One such technique is called SMRT, or Single Molecule Real Time sequencing; it was invented at Cornell University in 2003 and developed commercially by Pacific Biosciences.3 A nanofabricated well is created that confines light to a very small volume containing a single DNA polymerase, the biological machine that constructs double-stranded DNA. As the polymerase adds new bases to the DNA strand, a characteristic fluorescence signal is emitted that indicates the identity of the genetic letter. This technique can be run in massively parallel fashion, with millions of wells simultaneously monitored. Other approaches under commercial development include the electronic detection of hydrogen ions released during synthesis, or the detection of the change in current measured when DNA threads itself through a nanopore.

These next-generation technologies have helped put DNA sequencing on a hyperspeed version of Moore’s Law. The first human genome cost a few billion dollars to sequence. As recently as the beginning of 2008, the cost was approximately $10 million. Now, four years later, the cost is closer to $10,000, a thousandfold drop! This remarkable drop in sequencing cost (and a steady drop in DNA synthesis cost) is redefining the possible, outperforming Moore’s Law for electronics by leaps and bounds. These hybrid organic-inorganic nanomachines are revolutionizing the biological sciences, turning genomic sequencing into what has been described as an information microscope with the ability to address questions in fields ranging from molecular biology to evolution and ecology. Next up is the $1,000 genome, which will likely set off a revolution in personal genomics. It would make whole genome sequencing as common as knowing your blood type. Medicines and procedures could then be tailored to fit specific genomic profiles.
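How much faster than Moore's Law is this? A quick calculation turns the quoted costs into a halving time. The sketch below uses the figures from the text ($10 million falling to $10,000 over four years); the 24-month doubling period used for the electronics comparison is an assumption made here for illustration.

```python
import math


def halving_time_months(cost_start, cost_end, elapsed_years):
    """Average time for the cost to halve, assuming a steady exponential decline."""
    halvings = math.log2(cost_start / cost_end)
    return elapsed_years * 12 / halvings


def fold_improvement(elapsed_years, doubling_months):
    """Improvement factor over a period for a quantity that doubles at a fixed interval."""
    return 2 ** (elapsed_years * 12 / doubling_months)


sequencing = halving_time_months(10e6, 10e3, elapsed_years=4)
print(f"sequencing cost halved roughly every {sequencing:.1f} months")
print(f"a 24-month doubling over the same four years gives only {fold_improvement(4, 24):.0f}x")
```

On these numbers the cost of sequencing halved about every five months, against a factor of four for electronics over the same period under the assumed doubling time.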

In the last decade, new kinds of hybrid two-dimensional materials have appeared that bring electronics and biology closer together. These atomically thin materials combine many of the attributes of biological and microelectronic materials in a single platform. The most widely touted is graphene, the hexagonal arrangement of carbon atoms that is found in pencil lead. Individual sheets of graphene were isolated in 2004, and this work led to a Nobel Prize a scant six years later. These sheets combine electronic and optical properties that rival the best semiconductors and metals, but physically they are as flexible as a biological membrane. Scientists, including my own group, have already used them to create nanoscale resonators analogous to the quantum machines described above; other groups have created nanopores in these membranes that can be used to detect the translocation of DNA (Figure 2). Currently, the reach of lithography stops at approximately 20 nm, while a strand of DNA is approximately 2 nm across. These new materials help bridge that gap: the first atomic-scale materials that embody the full power of the microelectronics revolution but that can interact with biological molecules on their own terms.

Figure 2

Artist’s Rendition of a Graphene Nanopore DNA Sequencer

DNA passing through a hole in a one atom–thick graphene sheet changes the flow of ions around it in a way that can be used to determine the DNA’s genetic sequence. Image courtesy of the Cees Dekker lab at TU Delft/Tremani.

* * *

The mechanic should sit down among levers, screws, wedges, wheels, etc. like a poet among the letters of the alphabet, considering them as the exhibition of his thoughts; in which a new arrangement transmits a new idea to the world.

– Robert Fulton, 1796

Biology takes a very unusual approach to the creation of nanomachine parts: it folds them out of strings. When the ribosome makes a new part, it first creates a linear, one-dimensional amino acid biopolymer. That biopolymer, guided by the amino acid sequence encoded in its structure (and sometimes with the help of other machines), folds itself into useful forms. A conceptually similar approach is taken by the practitioners of the ancient art of origami, in which complex three-dimensional objects emerge from the folding-up of a two-dimensional sheet of paper.

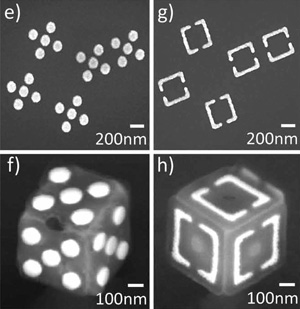

To the nanotechnologist, this approach has much to recommend it. It allows a planar fabrication technology like lithography, or a linear fabrication technology like DNA synthesis, to be the basis for constructing more complex three-dimensional systems. In the field of MEMS, engineers have been applying origami-like techniques for some time to create, for example, arrays of steerable mirrors that look like something from a miniature pop-up book. The Gracias research group at Johns Hopkins University is pushing this technique to the nanoscale, lithographically patterning 100-nm scale planar features connected with tin solder at the joints that, when heated, fold up the structure (Figure 3).4 My own research group is attempting to perform similar origami tricks using atomically thin graphene membranes.

Figure 3

Lithographic Origami

Panels patterned using multistep lithography subsequently fold into micron-sized cubes with novel physical, chemical, or electromagnetic properties. Source: Jeong-Hyun Cho et al., “3D Nanofabrication: Nanoscale Origami for 3D Optics,” Small 7 (14) (2011): 1943; used here with permission from the authors.

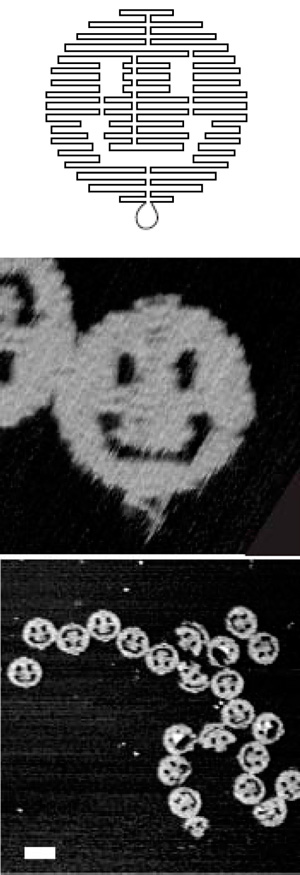

The hackers have also gotten into the origami game, with great success. The field was pioneered by New York University’s Ned Seeman, who designed short DNA sequences that assembled themselves into interesting shapes. In 2006, Paul Rothemund at Caltech took DNA origami to the next level. He developed algorithms to form arbitrary two-dimensional patterns in DNA, from happy faces to maps of the world.5 His approach begins with a single long “raster scan” DNA strand that forms the basic pattern; a number of short staple strands are then designed to bind to it and pin the structure together. Assembly involves mixing all the strands, then heating and cooling the mix, after which the structure assembles itself. To demonstrate the technique, Rothemund made smiley faces by the billions, creating what has been called “the most concentrated happiness ever experienced on Earth” (see Figure 4). This set off a wave of research on DNA origami projects, including one project with robotic DNA spiders that travel a DNA origami landscape.6 More recent work by the Church research group at Harvard University’s Wyss Institute for Biologically Inspired Engineering is taking this technology toward clinical applications. Church and colleagues have created a DNA origami robot that could passively carry a payload and then release it when certain molecular signatures are encountered on the surface of a cell, a kind of smart land mine that could be deployed to attack cancerous cells. It may not be the nanoscale submarine of the nanotechnologist’s fantasies, but it is certainly another step forward.

Figure 4

DNA Origami

DNA sequences can be designed so that they fold themselves into arbitrary two-dimensional shapes; here they are shown creating smiley faces 200 nm across. Source: Paul W.K. Rothemund, “Folding DNA to Create Nanoscale Shapes and Patterns,” Nature 440 (2006): 297; used with permission from the author.
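The scaffold-and-staple idea can be sketched in a few lines. The toy below is not Rothemund's algorithm: it ignores helical geometry, crossover placement, and melting temperatures, and the scaffold sequence and region coordinates are invented for the example. It shows only the core trick, which is that a staple is built from the reverse complements of two distant stretches of the long strand, so that binding both pulls them together.

```python
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    """Sequence of the strand that pairs with seq, read in its own 5'-to-3' direction."""
    return seq.translate(COMPLEMENT)[::-1]


def design_staple(scaffold: str, region_a: tuple, region_b: tuple) -> str:
    """Toy staple: one half pairs with region_a of the scaffold, the other with region_b."""
    seg_a = scaffold[region_a[0]:region_a[1]]
    seg_b = scaffold[region_b[0]:region_b[1]]
    return reverse_complement(seg_a) + reverse_complement(seg_b)


def binds(staple_half: str, scaffold_segment: str) -> bool:
    """True if the staple half is perfectly complementary to the scaffold segment."""
    return reverse_complement(staple_half) == scaffold_segment


# Hypothetical 30-base scaffold; a real one runs to thousands of bases.
scaffold = "ATGCGTACCGGATTACAGCTTAGGCATCGA"
staple = design_staple(scaffold, (0, 8), (20, 28))
print(staple)
print(binds(staple[:8], scaffold[0:8]), binds(staple[8:], scaffold[20:28]))
```

Repeating this for hundreds of region pairs along a long scaffold is, in spirit, how a raster pattern gets pinned into a fixed two-dimensional shape.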

* * *

Ever tried. Ever failed. No matter. Try again. Fail again. Fail better.

– Samuel Beckett

Fifty years after Richard Feynman’s speech to the American Physical Society, nanomachines are finally moving from dream to reality. Young scientists are flocking to the field, drawn by the promise of a new technology that is progressing in leaps and bounds. In the area of synthetic biology, the International Genetically Engineered Machine (iGEM) competition, an undergraduate competition in synthetic biology, is entering its eighth year, with more than a hundred teams vying to build the coolest organism possible. The winner of the 2011 competition, a team from the University of Washington, created a strain of E. coli that could make the alkane components of diesel fuel. In the area of nano-bots, the National Institute of Standards and Technology sponsors a Mobile Microrobotics Challenge, where dust mote–sized robots compete to push tiny soccer balls into goals.

So what about the ultimate: will we soon be building nanomachines that could genuinely be called a second form of life – machines that can build copies of themselves from raw materials, machines that can change and evolve? How will we do this? The obvious answer is to look to life for inspiration. But here we are confronted with a remarkable fact: we do not know how life did it. Life is a complex and interwoven technology, and no one yet understands how it bootstrapped itself into being. Life has two important parts, metabolism and replication. Did one part come first, or did they emerge together? Did life emerge from self-replicating RNA molecules, or did it develop metabolism and later add an information-containing molecule? It is one of the great outstanding questions in science; its answer may both shape and be shaped by advances in nanomachine design. Recall Feynman’s quote: What I cannot build I cannot understand. The lessons learned as we try to build ever-more sophisticated nanomachines will almost certainly inform our understanding of the origins of life, and vice versa.

Back in the 1960s, the semiconductor industry joined together what had been disparate fields, marrying electronics to information/computation. Today, they are so closely connected in our minds that it is hard to disentangle them. We are currently crossing a similar threshold. Fifty years from now, nanomachines will likely be pervasive, with the boundary between the lithographic and hacked forms ever more difficult to distinguish. They will be inside us and outside of us. We will be studying their evolution and ecology. My guess is that we will have solved the riddle of the origin of life – and will have created a few more examples of life in the process. We’ll have a hard time remembering that the fields of molecular biology and nanomachines were ever separate disciplines.

ENDNOTES

6 Kyle Lund et al., “Molecular Robots Guided by Prescriptive Landscapes,” Nature 465 (2010): 207.