When Law Calls, Does Science Answer? A Survey of Distinguished Scientists & Engineers

Sound legal decision-making frequently requires the assistance of scientists and engineers. The survey we conducted with the cooperation of the American Academy examines the views of the legal system held by some of the nation’s most distinguished scientists and engineers, what motivates them to participate or to refuse to assist in lawsuits when asked, and their assessment of their experiences when they do participate. The survey reveals that a majority of the responding scientists and engineers will agree to participate when asked, and when they turn down requests, the most common reasons are lack of time and absence of relevant expertise. Dissatisfaction with legal procedures is also a deterrent, but our respondents indicated that some procedural changes would make their participation more likely. In addition, participation appears to be associated with a greater belief in the ability of the legal system to deal well with scientific matters.

The online methodological appendix to the survey is available at http://www.amacad.org/daedalus/whenlawcalls.

Sound legal decision-making increasingly depends on sound science. Yet we know remarkably little about how scientists and engineers view the legal system or what leads them to decide whether and how to interact with it. Some commentary indicates that scientists regard the legal system with suspicion and discomfort, but the supporting evidence is largely anecdotal. As a result, it is hard to gauge how deep or widespread these reactions are, and – to the extent they exist – whether they are fueled by accurate information or false impressions. Getting a better handle on relationships between scientists and the law matters because the importance of science for law cannot be disputed.

Ideally, courts and litigants would be able to call on knowledgeable, unbiased scientists and engineers whenever the fair resolution of legal disputes depended on scientific or technical information. The importance of the science-law relationship led us, with the cooperation of the American Academy of Arts and Sciences, to conduct a survey of the Academy’s science and engineering members with the goal of providing empirical grounding for discussions about how scientists relate to law. Our survey probes scientists’ views of the legal system and their involvement with it as experts, especially in litigation, as well as the barriers to such involvement and the legal or policy changes that might make scientists more willing to aid courts and lawyers when called upon.

The legal system has long recognized the value of scientific knowledge, and lawyers and judges have sought to make use of it, even while struggling to make sense of what science has to offer. The frustration is poignantly reflected in the words of Judge Baron Hatsell in 1699, when in a homicide trial he spoke to the jury about conflicting expert testimony on the cause of death of a young woman whose body was recovered from a lake:

The Doctors and Surgeons have talkt a great deal to this purpose, and of the waters going into the Lungs or the Thorax, but unless you have more skill in Anatomy than I, you won’t be much edified by it. I acknowledge I never studied Anatomy but I perceive that the Doctors do differ in their Notions about these things.1

Scientists, for different reasons, have their own difficulties with how the law goes about its business. As one of our respondents put it:

Science is about truth. The legal system is about spinning, distorting or suppressing the truth in order to win. The ethos of the two fields is fundamentally different. Even judges are biased and not objective. For these reasons, participation in the legal system is very frustrating for a scientist.

The challenge for the modern American legal system is obvious and increasing, as the frequency and complexity of encounters between science and law have multiplied with the dramatic expansion of legally relevant scientific knowledge. Courts and scientific societies have struggled with the tensions that exist.

Justice Stephen Breyer wrote in 1998 that the law “increasingly requires access to sound science.”2 Citing examples of cases on the U.S. Supreme Court’s docket, he identified a range of difficult legal problems that implicated scientific, medical, and engineering questions. In lower courts too, both civil and criminal, scientific claims, along with arguments about the quality of expert testimony, are expanding features of the legal landscape. Suits for injuries from chemical exposure, for example, may require evidence on exposure effects from scientists with expertise in chemistry, biology, epidemiology, and pathology; a bridge collapse or a patent dispute may require engineering and technological expertise; and DNA evidence is often key in identifying criminals and excluding innocent individuals from prosecution. Moreover, science does not stand still. New developments in genetics, neuroscience, material sciences, and other fields are entering into legal discourse, and claims and cases are beginning to turn on them. As science has become, if anything, more important to the fair resolution of legal disputes, the quality of scientific evidence in the courts continues to be the subject of controversy.

In 1993, the U.S. Supreme Court in Daubert v. Merrell Dow Pharmaceuticals highlighted the obligation of judges to act as gatekeepers responsible for keeping unreliable scientific evidence from being admitted in litigation.3 Following the Daubert decision, Judge Alex Kozinski, on remand, characterized the challenge for judges called upon to rule on the admissibility of expert scientific testimony:

[T]hough we are largely untrained in science and certainly no match for any of the witnesses whose testimony we are reviewing, it is our responsibility to determine whether those experts’ proposed testimony amounts to “scientific knowledge,” constitutes “good science,” and was “derived by the scientific method.”4

As Judge Kozinski’s comments suggest and Justice Breyer’s later observations indicate, Daubert, although it put more gatekeeping power in the hands of the judge, has far from resolved the tensions that arise when science appears relevant to litigation.5

Scientific societies have also focused on the stresses that exist between science and the law, often through the lens of ethics.6 The American Psychological Association’s code of conduct, for example, specifically addresses issues that arise when psychologists are called on to serve in forensic capacities.7 The various, largely prosecution-oriented forensic sciences, spurred on by a critical National Academy of Sciences (NAS) report, have been working not only to increase the quality of their sciences but also to improve the accuracy and clarity of how forensic experts present their findings in court.8

A common explanation for complaints about the quality of the scientific evidence courts receive is the claim that “scientists tend to be leery of lawyers and the legal process, preferring not to venture into the courtroom.”9 Prior studies of experts in the American legal system provide some evidence of a disconnect between science and law, but the literature is sparse, consisting primarily of small surveys of testifying experts,10 and four important case studies, each discussing cases from the pre-Daubert era: one involving an examination of court documents and interviews with the participants in six criminal and three civil cases that included scientific evidence,11 and the other three analyzing court opinions in several cases involving statistical evidence.12 Our current survey was designed to examine evidence for some of the themes touched on in this prior research (for example, dissatisfaction with the quality of opposing experts and questions about judicial competence) and to go beyond the prior research in examining in greater detail the response of experts to the legal system.

We designed our survey, in conjunction with the American Academy of Arts and Sciences’ Public Face of Science project, to capture the views of distinguished scientists and engineers about the legal system and their experience with it. We surveyed scientists (including physical, biological, and social scientists) and engineers who were elected Fellows of the Academy.13 We asked them whether lawyers or judges had ever requested their advice, whether they had ever agreed to help if asked, why they were willing to help and why they refused to provide help if they declined, and what their experience was if they assisted, and we sought their views on various aspects of the legal system and the system as a whole. We also explored their future willingness to participate in the legal system, and asked them whether certain proposed changes in legal procedures would affect that willingness to participate. Finally, we sought to determine whether participation correlated with and perhaps affected views of the legal system.

We were particularly interested in understanding how the legal system interacts (or doesn’t) with the nation’s most respected scientists and engineers. Not only are these people likely to have the most to offer the legal system, but if they are seen as willing to engage with the legal system, younger scientists and engineers may be more likely to follow. To capture the views of highly respected scientists and engineers, we invited the members of the Academy in Class I (mathematical and physical sciences), Class II (biological sciences), and Class III (social sciences) to complete an online survey (n = 3328).14 We obtained 366 responses, a response rate of 11.0 percent. The response rate is not as high as we had hoped, but our data constitute what is by far the largest number of scientists and engineers ever surveyed on their experience with, and perceptions of, the legal system.

Our response rate is similar to the 12.1 percent response rate that was obtained in a recent survey that sought to learn what members of another organization of scientists, the American Association for the Advancement of Science, thought about the FBI and law enforcement.15 Hence we do not think the survey topic discouraged participation. To check for biases in our responding sample, we conducted a follow-up survey that could be answered in under five minutes, either by responding directly to questions on the email request or by going to a hyperlinked location like the one in the original survey. Two hundred fifty-three Academy members who had not responded to the original survey provided answers to this follow-up request. Those in our follow-up sample were similar to our sample respondents in gender, age, Academy class, whether they had ever been asked for assistance by the legal system, and how favorably they viewed the legal system. These similarities suggest that the experience and views of those who completed the initial full survey were not idiosyncratic. (See the methodological appendix posted at http://www.amacad.org/daedalus/whenlawcalls.) Moreover, this follow-up group gave us a larger total sample (n = 619) and a total response rate of 18.6 percent on which to examine participation rates and respondents’ overall evaluations of the ability of the legal system to deal with science.
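The two response rates above follow directly from the reported counts: 366 full responses, plus 253 follow-up responses, out of 3,328 invited Fellows. The minimal sketch below simply redoes that arithmetic; the constant names and the `response_rate` helper are hypothetical labels for illustration, not part of the authors’ analysis.

```python
# Sketch: recomputing the response rates reported in the text.
# Counts come from the article; the names below are hypothetical labels.

INVITED = 3328          # Academy Fellows in Classes I-III who were invited
MAIN_RESPONSES = 366    # completed the full online survey
FOLLOW_UP = 253         # initial nonrespondents who answered the short follow-up


def response_rate(responses: int, invited: int) -> float:
    """Return the response rate as a percentage rounded to one decimal."""
    return round(100 * responses / invited, 1)


main_rate = response_rate(MAIN_RESPONSES, INVITED)
combined = MAIN_RESPONSES + FOLLOW_UP
combined_rate = response_rate(combined, INVITED)

print(f"Main survey: {MAIN_RESPONSES}/{INVITED} = {main_rate}%")
print(f"Combined sample: {combined}/{INVITED} = {combined_rate}%")
```

Running it reproduces the 11.0 percent rate for the main survey and the 18.6 percent rate for the combined sample of 619.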

We also looked at how representative our respondents were by comparing the gender, age, and Academy class distributions of all Academy members and the initial sample. The distributions in the population and sample were substantially similar in these three categories. Sample respondents included a somewhat higher proportion of women (24 percent versus 17 percent).16 And although the mean age in both the sample and population was seventy-one, the sample included a higher proportion of persons sixty-five or older (77 percent versus 69 percent) than is found in the overall population of Academy members.17 The overrepresentation of those sixty-five or older in the sample may reflect the less busy lives of partially or fully retired scientists, as well as the possibility that those who have in the past participated or been asked to participate as experts were more likely to respond than those without such experience, with older scientists likely having accumulated more opportunities to participate. Also, Class III members (social scientists and attorneys) responded at a somewhat higher rate than their proportion in the population (33 percent of respondents versus 28 percent of the population).18 To see if these modest differences between the sample and population might distort our results, we conducted all analyses using both the unweighted responses and the responses weighted for gender, age, and class membership. Weighting did not change our results, so we use the unweighted data in presenting our findings.
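The weighting procedure itself is not spelled out here. As a rough illustration of what weighting for a known population characteristic involves, the minimal sketch below applies simple post-stratification (cell weighting) to a single variable, gender, using the shares of women reported above (17 percent of the population, 24 percent of the sample). The respondent records and the “ever asked” outcome are hypothetical, and weighting jointly on gender, age, and class (for example by raking) would be more involved than this.

```python
# Sketch: post-stratification (cell) weighting on one variable, gender.
# Population and sample shares of women come from the article (17% vs. 24%);
# the respondent records below are HYPOTHETICAL, for illustration only.

from collections import Counter

POP_SHARES = {"female": 0.17, "male": 0.83}


def cell_weights(sample_groups, pop_shares):
    """Weight each group by (population share) / (sample share)."""
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {g: pop_shares[g] / (counts[g] / n) for g in counts}


def weighted_share(values, groups, weights):
    """Weighted proportion of respondents whose value is True."""
    total = sum(weights[g] for g in groups)
    hits = sum(weights[g] for v, g in zip(values, groups) if v)
    return hits / total


# Hypothetical sample of 100 with the article's 24% share of women.
groups = ["female"] * 24 + ["male"] * 76
# Hypothetical outcome, "ever asked for advice": 12 of 24 women, 42 of 76 men.
asked = [True] * 12 + [False] * 12 + [True] * 42 + [False] * 34

w = cell_weights(groups, POP_SHARES)
unweighted = sum(asked) / len(asked)
weighted = weighted_share(asked, groups, w)

print(f"weights: {w}")
print(f"unweighted: {unweighted:.3f}, weighted: {weighted:.3f}")
```

Women are down-weighted (about 0.71) and men up-weighted (about 1.09) so that the weighted sample matches the population split. With these made-up records the estimate moves only from 0.540 to about 0.544, which shows how modest sample-population differences can leave results essentially unchanged.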

While we cannot be certain that our sample respondents look like those Academy members who did not respond, there is little reason to suspect that the responses we received have serious relevant biases. Moreover, even if unknown biases exist, our survey sheds light on how a good proportion of the country’s most distinguished scientists regard and interact with the legal system.

A majority (54 percent) of our respondents reported that they had been asked to provide expert scientific or engineering advice at least once. More than one-third (38 percent) said they had been asked three or more times, and one in six (17 percent) reported receiving ten or more requests.19 If our nonrespondents were, as we expect, disproportionately people who were never asked for assistance, these rates are inflated; but note that a majority (60 percent) of respondents to our brief follow-up survey also said they had been asked for assistance. The request numbers suggest that the legal system approaches distinguished scientists and engineers for assistance with some frequency. Across disciplines, the most frequently asked experts worked in economics (87 percent), chemistry (81 percent), and engineering, computer sciences, and information technologies (80 percent). Next were noneconomist social scientists (72 percent). Those who reported the fewest requests were in the Academy’s astronomy, physics, and earth sciences cluster (18 percent). Table 1 shows the full breakdown by disciplinary cluster.20 These patterns make sense: experts in disciplines like astronomy are less likely to have expertise relevant to legal matters than experts in economics and chemistry.

Table 1

Academy Scientists Asked for Scientific or Engineering Advice, Requests by Discipline

Q: What is your field of scientific or engineering expertise?

Q: Has a party, attorney, or judge ever asked for your expert scientific or engineering advice?

| Fields of expertise | Ever asked for advice: Yes % (N) | Ever asked for advice: No % (N) | Total % (N) |
|---|---|---|---|
| Biological and cognitive sciences | 50.5% (46) | 49.5% (45) | 100% (91) |
| Medical sciences | 61.1% (11) | 38.9% (7) | 100% (18) |
| Astronomy, physics, and earth sciences | 17.8% (8) | 82.2% (37) | 100% (45) |
| Chemistry | 81.0% (17) | 19.0% (4) | 100% (21) |
| Mathematics and statistics | 36.0% (9) | 64.0% (16) | 100% (25) |
| Social sciences except economics | 71.8% (28) | 28.2% (11) | 100% (39) |
| Economics | 86.7% (13) | 13.3% (2) | 100% (15) |
| Social and developmental psychology and education | 57.1% (12) | 42.9% (9) | 100% (21) |
| Engineering, computer sciences, and information technologies | 80.0% (20) | 20.0% (5) | 100% (25) |
| Law, including the practice of law | 35.0% (7) | 65.0% (13) | 100% (20) |
| Total | 53.4% (171) | 46.6% (149) | 100% (320) |
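Each percentage in Table 1 is the “yes” count divided by the row total. The short sketch below recomputes the row shares and the overall 53.4 percent from the (yes, no) counts copied out of the table, listing fields from most to least frequently asked; the dictionary and the `pct_yes` helper are illustrative names, not the authors’ code.

```python
# Sketch: recomputing Table 1's row percentages from its reported counts.
# The (yes, no) pairs are copied from the table; the names are hypothetical.

TABLE_1 = {
    "Biological and cognitive sciences": (46, 45),
    "Medical sciences": (11, 7),
    "Astronomy, physics, and earth sciences": (8, 37),
    "Chemistry": (17, 4),
    "Mathematics and statistics": (9, 16),
    "Social sciences except economics": (28, 11),
    "Economics": (13, 2),
    "Social and developmental psychology and education": (12, 9),
    "Engineering, computer sciences, and information technologies": (20, 5),
    "Law, including the practice of law": (7, 13),
}


def pct_yes(yes: int, no: int) -> float:
    """Share answering 'yes', as a percentage rounded to one decimal."""
    return round(100 * yes / (yes + no), 1)


# List fields from most to least frequently asked.
for field, (yes, no) in sorted(TABLE_1.items(), key=lambda kv: -pct_yes(*kv[1])):
    print(f"{pct_yes(yes, no):5.1f}%  (n = {yes + no:3d})  {field}")

total_yes = sum(y for y, _ in TABLE_1.values())
total_no = sum(n for _, n in TABLE_1.values())
print(f"Total: {total_yes}/{total_yes + total_no} = {pct_yes(total_yes, total_no)}%")
```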

When top experts are approached for assistance, they are likely to agree to provide it, at least on some occasions. In our sample, over 90 percent of those asked for advice agreed to assist at least once.21 That willingness to serve is reflected in respondents’ general agreement with the statement: “Absent strong reasons to the contrary, scientists should share their knowledge with the legal system when they are asked to serve as experts” (84 percent agreed or strongly agreed).22

About 10 percent of those who responded to our main survey never agreed to assist lawyers or judges when asked, while those who agreed to assist on one or more occasions may still turn down other requests. Why do they refuse? We asked respondents to check up to three of thirteen possible reasons for turning down requests, or to identify other reasons for refusing (Table 2). The most common reason for refusing to participate was “timing/other commitments” (66 percent). The demands placed on experts in legal matters can be not only time-consuming but also unpredictable in their timing. Unlike experts who are full-time consultants or who are employed by the government to provide forensic expertise, professional scientists and engineers in both the academy and industry typically have jobs that make them only sporadically available to assist on legal issues. Strikingly few respondents mentioned formal organizational barriers to participation or advice against participating (6 percent), so it appears that few distinguished scientists are required by their employers’ policies to turn down requests for assistance. Thus, it is time constraints rather than organizational restrictions that create a catch-22 for the legal system: the highest quality scientists have so much on their plates that they may be the least available to assist, even if they would otherwise be willing to do so.

Table 2

Reasons for Turning Down Requests

Q: Thinking back to all the times you turned down requests to serve as an expert, what were your most common reasons for refusing? (Check up to three)

| Reason | N checked | % checked |
|---|---|---|
| Timing/other commitments | 89 | 65.9 |
| Outside my area of expertise | 66 | 48.9 |
| Evidence didn’t favor party asking | 32 | 23.7 |
| Doubts about the legal system (three items) | 31 | 23.0 |
| Particular parties or attorneys (two items) | 28 | 20.7 |
| Wanted my reputation, not my knowledge | 28 | 20.7 |
| Conflict of interest | 15 | 11.1 |
| Fee issues | 10 | 7.4 |
| Advice or institutional policy against (two items) | 8 | 5.9 |
| Other reasons | 6 | 4.4 |
| Total respondents (respondents could check up to three responses) | 135 | |

The second most common reason for refusing to participate was that the “request was outside my area of expertise” (49 percent), an appropriate and desirable response since fit matters. The frequency of this response suggests that a system that helps lawyers and judges identify leading experts with knowledge specifically relevant to the issues in a case would increase the efficiency of searches for advice and might promote better expert advice in the legal system. In this connection, we asked those respondents who had provided assistance how, to the best of their knowledge, they had been identified by an attorney or judge as a potential expert.23 Although commercial organizations provide directories of potential experts in various scientific and engineering fields, attorneys, at least according to the respondents, rarely (6 percent) located them by using commercial referral sources. More commonly, respondents said they were identified through their scholarship, or their names were provided by another lawyer, another expert, or the client. It is likely, however, that scientists who are less publicly visible than Academy members and those for whom consulting is their primary professional activity would be more likely to be identified through commercial sources.

The next most common reason for refusal, offered by nearly one in four experts (24 percent), was that they “did not think the scientific or engineering evidence favored the party who wanted my knowledge.” This response is inconsistent with willingness to be a “hired gun,” a charge frequently leveled at expert witnesses. It may reflect the high quality of Academy experts and the fact that they do not need to rely on consulting for a dominant portion of their income. Expert refusals for this reason may have the positive consequence of leading attorneys to reassess the strength of their cases. They may, however, also encourage attorneys to search for more party-friendly experts, whether or not the party-friendly view has adequate scientific justification. Such searches, which can distort the quality or implications of the scientific evidence that finds its way into legal proceedings, are abetted by the absence of rules requiring attorneys to reveal the identities of all experts consulted in connection with a case. Daubert and its progeny should theoretically filter out the worst abuses of this sort, but the Daubert line of cases indicates it is a far from perfect filter.

Time constraints and mismatches are not the only reasons why the legal system loses potentially valuable scientific expert knowledge. Some experts indicated that they refused to assist because they had doubts about the legal system (23 percent). They questioned the ability of the adversary process to resolve science or engineering disputes, doubted whether the legal system could fairly resolve the dispute, or did not relish the prospect of being cross-examined. The majority (84 percent) of the respondents who expressed unease with the legal system had, however, agreed to assist in response to some requests and 68 percent had actually provided assistance. In some cases, their doubts were most likely stoked by their experiences.

Experts also turned down requests because they did not wish to assist particular parties or attorneys (21 percent). One respondent, for example, noted, “I will never work for a patent troll.” To the extent that experts share preferences, some parties may find it difficult to obtain expert assistance.24 An unequal supply of expertise may not undermine the quality of legal decision-making if expert preferences align with scientific merit, but it creates problems if they do not.

Respondents rarely identified fee issues as a reason why they refused requests for assistance (7 percent), although social desirability bias may have discouraged checking this response. It is, however, likely that fees are seldom the deal breaker for these scientists. As responses to this item indicate, other considerations seem to be more important. Not only are distinguished scientists and engineers likely to be able to command substantial compensation, at least in civil cases, but money may not be the principal motivator for the most successful, and typically the most highly paid, academic and industry scientists. Indeed, two respondents who cited fee issues said they refused to participate because “mostly attorneys did not want me to testify unless I would be paid, and I refused” and “[I] do not do this for the fees ever, but pro bono for the common good. Many requests I decline are for a fee which I do not feel appropriate to take.” However, as we discuss below, promised financial compensation is a factor affecting the participation of some experts.

Taken as a whole, responses to our inquiry into why scientists choose not to participate in the legal system present a reassuring picture. Fewer than one in four of those refusing said they did so because of doubts about various aspects of the legal system, and only one respondent gave this as the sole reason for refusing to participate. Most often, the time needed to participate was a major factor (66 percent), and thirteen respondents (10 percent) gave time or organizational policies against participation as their only reasons for refusing. Perhaps most heartening is the degree to which ethical reasons appear to have motivated nonparticipation. These included admitted lack of expertise, feeling that the evidence did not favor the side that sought assistance, conflicts of interest, realizing that the lawyer making the request more highly valued the expert’s reputation than knowledge, and not wanting to work for a particular client or attorney. Overall, 79 percent of our respondents listed at least one of these concerns as a reason for nonparticipation. There is almost no evidence in these data that the kinds of scientists elected to the Academy see themselves as, or are willing to be, “hired guns.”

Participation as a testifying expert often involves a dramatic diversion from the central professional activities of Academy scientists and may be the most demanding role a scientific expert is called upon to play in the legal system. Our sample included ninety-four experts who indicated that in their most recent experience serving as an expert witness, they had testified in a hearing or trial. We asked them to evaluate the importance of various possible reasons for their willingness to participate as an expert in that case (see Table 3).

Table 3

Importance of Reasons for Participating in Most Recent Case

Q: How important were each of the following reasons for your decisions to provide assistance in this case? (from 1 = Not very important to 5 = Extremely important)

| Reasons for participating (asked of those who indicated they had testified) | Responded with Important or Very Important, % (n/N) |
|---|---|
| My expertise could assist in a correct resolution | 85% (68/80) |
| Scientists have an obligation to share knowledge | 64% (47/74) |
| My side was scientifically correct | 86% (64/74) |
| My side was morally correct | 72% (50/69) |
| A learning experience | 46% (33/72) |
| Wanted to affect law or policy | 30% (20/66) |
| Promised financial compensation | 38% (25/66) |

Consistent with a focus on scientific accuracy, the reasons our respondents rated as most important were the ability to assist in correctly resolving the case (85 percent) and the associated belief that the expert was testifying for the side that was scientifically correct (86 percent). Their side’s moral correctness was an important reason for 72 percent of respondents, and nearly two-thirds (64 percent) identified the obligation to share knowledge as an important motivation. Nearly half (46 percent) said it was important that they thought it would be a learning experience. Only 30 percent said that wanting to affect law or policy was an important motivator.

A substantial minority (38 percent) said they viewed promised financial compensation as an important factor motivating participation. Thus, although we found that experts seldom turned down requests to assist because they regarded the fees they would receive as insufficient, expert fees can be an incentive to participate. One expert explaining his participation commented, “I believe in sharing scientific knowledge and making legal decisions based on scientific knowledge, the cases are interesting, and I like the money.” Another said, “I’ve been doing it for 40 years and overall greatly benefit from the experience. It enhances my research, teaching[,] collections of interesting life experiences, sense of helping the innocent and bank account.” Several others said that in deciding whether to participate they considered both the time required and the level of compensation, with some noting they did not accept assignments when they felt their time would not be fairly compensated. Others were quite blunt in describing the motivational effects of fees, including respondents who explained their willingness to participate in the future by writing, “compensation,” “pay,” and “[i]nterest, money.” Still, when asked about the most recent case in which they testified, only 38 percent rated financial compensation as an important motivating reason, and most rated at least three other reasons as also important. Only one respondent gave money as the sole important motivation for providing assistance. Thus, although a few scientists refuse compensation for providing assistance, most expect to be compensated and many acknowledge that compensation is a motivator. Nonetheless, their motivations to assist do not appear to be driven solely, or in most cases even largely, by a profit motive.

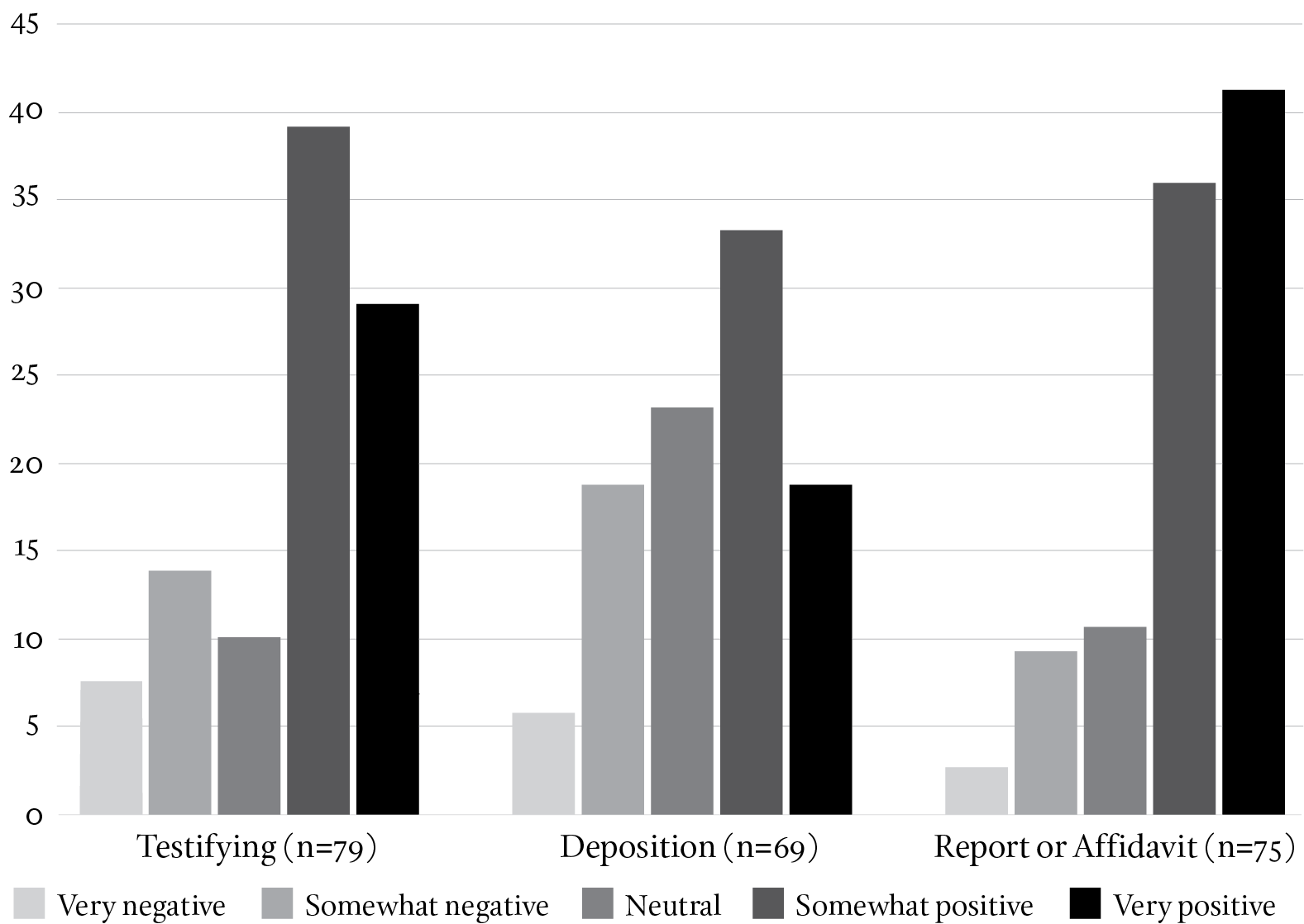

Attorneys may ask the expert scientists and engineers they hire to testify at hearings or trials, to answer questions at depositions, or to write reports or affidavits. Reactions to these activities highlight differences between the legal system’s demands and the way scientists and engineers generally spend their professional time.

Court testimony typically follows a question-and-answer format. Unlike the classroom, where students may ask questions but the professor controls the flow (and number) of student remarks, in a courtroom the attorneys’ questions seek to control how experts present their evidence and opinions. During direct examination, the questions come from the lawyer who hired the expert. This dialogue has typically been rehearsed, often incorporates the expert’s suggestions, and is designed to persuade the judge and/or jury. In contrast, the opposing attorney’s cross-examination typically attempts to constrain what the expert can say, sometimes in ways that will frustrate an expert whose strongest desire is to state the whole truth. The cross-examiner may also challenge not only the accuracy of the expert’s opinions but sometimes the expert’s competence and integrity as well. Not surprisingly, experts think more favorably of direct examination than of cross-examination (81 percent versus 40 percent positive), and they see the lawyer on the side for whom they testified in a more positive light than the opposing side’s lawyer (92 percent versus 31 percent positive).25 Although some trial experiences generated complaints (“The entire process is reminiscent of a high school boy’s locker room where attorneys try to play gottcha and to undermine rather than to reveal, reconcile, and allow the judge or jury to make informed decisions”), 68 percent of the experts who testified at trial rated the overall experience positively, including 29 percent who rated it very positively (“I enjoyed it – learned a lot – a different world”).

In a deposition, unlike in a trial, only the opposing attorney asks questions, and no judge is present. Moreover, the rules of evidence, including rules of relevance, are relaxed. The expert in a deposition thus lacks the opportunity that a trial presents to educate a neutral decision maker, and is subject to cross-examination without a judicial referee to limit the nature or extent of the questioning. As with the trial experience, experts rated the deposition behavior of the attorney for their side more positively than the behavior of the opposing attorney (78 percent versus 25 percent). Some respondents, given the opportunity to elaborate, showed impatience with the experience (“only fishing expeditions”; “I don’t like having my integrity questioned”). Experts who were deposed were on average less positive about the overall experience than those who testified: 52 percent rated it as positive, including 19 percent who rated it as extremely positive.

Unlike the trial testimony and deposition experience, report writing is familiar territory for scholars. Although an expert report or affidavit in litigation differs in form from a scholarly article, the expert in both instances is describing what she believes and the evidence supporting that belief. Experts by and large approved of (81 percent positive) the cooperation they received from the attorney who asked them to write a report. They reported that the attorney was willing to accept their independent view (91 percent) and that their report influenced the attorney’s beliefs about the case (83 percent). Overall, writing a report or affidavit was the part of the litigation assistance process viewed most favorably (77 percent positive, including 41 percent very positive). Figure 1 shows how reactions to these three kinds of involvement differ.26

Figure 1

Expert Evaluations of the Overall Experience: Testifying, Deposition, and Report or Affidavit Writing

What we see reflects the generally positive view that expert participants have of their experience, but it also echoes a distaste for adversary procedures that some experts identified as a reason why they refused to participate on one or more occasions.

We noted earlier that 90 percent of experts who had been asked for assistance had agreed to assist at least once. We also saw that experts often turn down invitations to serve. What about future service? We asked all respondents, “If you are asked in the future to serve as an expert in litigation, how likely is it that you would agree to serve?” One-third of our respondents (34 percent) said they were likely or very likely to serve, and 39 percent said they were uncertain. The remaining 28 percent said they were unlikely or very unlikely to serve. We asked the ninety-five respondents who said they were unlikely to serve to tell us why. Of the eighty-five individuals who responded to this follow-up question,27 sixteen mentioned being too old or retired and twenty-two mentioned being too busy, but thirty, one-third of these respondents, mentioned some distasteful reaction to courtroom behavior (“Accurate communication is extremely difficult and generally not desired by either side”; “Litigation sucks”) or the adversary system (“Don’t like being cross-examined”; “Because my experience was that my scientific expertise was not at issue – I was (unfairly) accused of inconsistent behavior”; “The experience of being deposed was horrible”) or the inconsistent demands of science and law (“I am uncomfortable now in the adversarial system in courts dealing with scientific matters”; “Often have difficulty with how scientific facts are distorted in legal proceedings to project what is wanted rather than what is true”). Twelve of the negative responses came from those who reported experience in providing expert assistance, while eighteen came from respondents who had no experience. These responses thus reflect a combination of reactions to prior experience and images of the legal system not based on personal experience, both of which militated against participation.28

Although many of these objections and sources of discomfort arise from intrinsic features of the American legal system and some are the legacy of a past unpleasant experience, other perceived problems may be open to adjustment. Thus, we assessed our respondents’ reactions to potential changes in trial procedure that might make participation more attractive to experts. This effort focused on four procedural variations that might affect a respondent’s willingness to participate in a legal proceeding.29

Being asked by a judge to serve as a court-appointed expert (see Daniel Rubinfeld and Joe Cecil’s contribution to this volume) had the most appeal, leading more than two-thirds of the respondents (69 percent) to say that they would be more likely to serve if asked to be a court-appointed expert (Table 4).30 This was particularly true among those who expressed uncertainty about future participation; 77 percent of those respondents said they would be more likely to participate if asked to serve the court rather than a party.31 Moreover, few respondents, whatever their current inclination to serve, said they would be less likely to assist if the request came from a judge (2 percent overall).

Table 4

How Potential Procedural Modifications Would Affect Future Willingness to Participate in Light of Current Willingness to Participate

| Potential procedural modification / change in future willingness to participate | Currently unlikely to participate in future: 27.8% (n=95) | Currently uncertain about future participation: 38.6% (n=132) | Currently likely to participate in future: 33.6% (n=115) | Total: 100.0% (n=342) |
|---|---|---|---|---|
| If I were asked by a judge or arbitrator to serve as a court-appointed expert rather than by a party as an adversary expert: | | | | |
| Would be more likely | 63.6% | 77.2% | 63.2% | 68.7% |
| No effect | 34.1% | 22.8% | 32.5% | 29.2% |
| Would be less likely | 2.3% | 0.0% | 4.4% | 2.1% |
| Total | 100.0% | 100.0% | 100.1% | 100.0% (n=329) |
| If I were permitted to meet privately with opposing experts to discuss issues and write a joint report indicating areas of agreement and areas of disagreement: | | | | |
| Would be more likely | 45.5% | 72.2% | 55.0% | 59.1% |
| No effect | 46.6% | 19.8% | 33.3% | 31.7% |
| Would be less likely | 8.0% | 7.9% | 11.7% | 9.2% |
| Total | 100.1% | 99.9% | 100.0% | 100.0% (n=325) |
| If I could question opposing experts in court and they could question me: | | | | |
| Would be more likely | 25.3% | 33.1% | 37.2% | 32.4% |
| No effect | 50.6% | 40.3% | 48.7% | 46.0% |
| Would be less likely | 24.2% | 26.6% | 14.2% | 21.6% |
| Total | 100.1% | 100.0% | 100.1% | 100.0% (n=324) |
| If I could answer juror questions after I gave my testimony: | | | | |
| Would be more likely | 44.3% | 63.2% | 62.2% | 57.7% |
| No effect | 50.0% | 34.4% | 36.9% | 39.5% |
| Would be less likely | 5.7% | 2.4% | 0.9% | 2.8% |
| Total | 100.0% | 100.0% | 100.0% | 100.0% (n=324) |

A majority of respondents (59 percent) were also attracted by the idea of meeting privately with opposing experts and writing a joint report that indicated areas of agreement and disagreement. This option was particularly attractive to scientists currently uncertain about their future willingness to serve, leading 72 percent of them to say the change would make them more likely to participate.32 Nonetheless, for some respondents, this change would decrease their willingness to serve (9 percent overall).

These two favored procedural modifications appear likely to diminish the adversarial nature of the expert experience. Court-appointed experts do not have partisan clients, and the opportunity to produce a joint report with the opposing expert potentially avoids or reduces clashes of expertise. The lesser enthusiasm for the third suggested change, permitting opposing experts to question one another in open court, is telling. Overall, less than one-third (32 percent) said it would increase their willingness to serve, and for one in five (22 percent), the change would make them less likely to serve. Even 14 percent of those who identified themselves as currently likely to participate said this procedural modification would make them less likely to serve. Thus, respondents expressed little interest in engaging in attorney-like adversary procedures by questioning and being questioned by an opposing expert. This is not because they reject all questioning. A majority of respondents (58 percent) liked the idea of allowing jurors to pose questions to them and few (3 percent) rejected it, perhaps because the procedure emulates a professor’s availability to answer student questions. Overall, our results suggest that the supply of high-quality expertise can be expanded if the legal system creates procedural options that emulate scientific and academic exchange. Such procedural adjustments would reduce attorney control and may seem inconsistent with the traditional adversary system of the United States, but other common law countries with adversary systems, like Canada and Australia, have taken steps in this direction.33

We have seen that the scientists in this survey often expressed frustration with legal procedures and, in some cases, a suspicion that those procedures were purposefully designed to avoid getting at the truth. How did the scientist-respondents as a whole view the success of the legal system in producing decisions that accord with sound science? Overall, we found that 60 percent of our respondents saw the legal system as very or somewhat successful while 40 percent had the opposite view.34 What explains this division of opinion? One possibility is that experience with the legal system leads to greater familiarity and more positive attitudes. Another is that experience and familiarity engender disappointment and cynicism, evoking more negative attitudes. As a first step, we compared the attitudes of those with and without experience providing advice. Those with experience rated the legal system as significantly more successful, with 70.0 percent of participants seeing the system as somewhat or very successful, while only 53.5 percent of the nonparticipants expressed that favorable view.35 This difference was also reflected in other perceptions and attitudes toward the legal system. Participants rated lawyer understanding of science more favorably than nonparticipants, saw scientists as treated with more respect, and viewed serving as an expert witness more favorably as a way to keep abreast of the real-world implications of their science. Participants did, however, express somewhat greater criticism of experts, indicating greater agreement than nonparticipants with the belief that even respected experts may compromise their standards in the context of the legal system (Table 5).

Table 5

Perceptions of and Attitudes toward the Legal System by Participants and Nonparticipants*

| Item | Never participated (n=201) | Participated (n=124) | p-level** |
|---|---|---|---|
| Science should aid law^a | 4.09 | 4.00 | ns |
| Judges can understand science^b | 2.81 | 2.85 | ns |
| Jurors can understand science | 2.44 | 2.39 | ns |
| Lawyers can understand science | 2.80 | 3.18 | p < .001 |
| Scientists are treated with respect^c | 3.14 | 3.43 | p < .002 |
| Experts compromise standards^d | 3.17 | 3.37 | p < .05 |
| Links real world and science^e | 2.75 | 3.12 | p < .003 |
| Success of legal system with science (% successful)^f | 53.5% | 70.0% | p < .002 |

* Scale ranges from 1 (strongly disagree) to 5 (strongly agree)

** “p <” = significance level; ns = not significant at the .05 level

a “Absent strong reasons to the contrary, scientists should share their knowledge with the legal system when they are asked to serve as experts.”

b “In cases where science is important to the decision, most judges and arbitrators have the ability to understand scientific evidence and the scientific process.” The next two items substitute “most juries contain jurors who” and “most lawyers” for “most judges and arbitrators.”

c “Scientists are treated with appropriate respect when they testify at trials or in depositions.”

d “Even respected scientific and engineering experts may compromise their scientific standards and write reports or give testimony [that] better support the position of the party that hired them.”

e “Serving as an expert witness is a good way for scientists to keep abreast of the real world implications of their sciences.”

f “In litigation or arbitration where scientific or engineering issues are involved, on average, how successful do you think the American legal system is in producing results that reflect sound scientific or engineering knowledge?” (percent somewhat or very successful).

Although this overall pattern undercuts the hypothesis that experience tends to undermine confidence in the legal system, we cannot be certain that experience promotes confidence. People may agree to participate because they already view the legal system positively (a selection effect), their views may be shaped by their participation (an experience effect), or both processes may help explain the correlation.

A small quasi-control group bears on the relative plausibility of the selection and experience effects (Table 6). Thirty-two respondents agreed at least once to participate but never actually participated. If favorable views of the legal system led people to agree to participate, this group should resemble the participants; if participation itself shaped their views, it should resemble respondents who were never asked. We did not ask why their agreement did not result in participation, but given how the litigation process works, we expect the most common reasons are that the case was withdrawn or ended in a quick settlement or plea agreement. The pattern of responses from this agreed-but-never-participated group was closer to that of the never-asked group than to that of the participating respondents.

The groups differed significantly on four statements in Table 6 (different subscripts indicate significant differences on the post hoc comparisons). In each of these comparisons, the “never asked” and “participated” groups differed from one another. On the evaluation of lawyer understanding, the participated group was distinctive: only participation was associated with a higher evaluation of lawyers’ ability to understand science. This pattern is consistent with close interaction fostering greater appreciation of how well lawyers understand science. It may also reflect a biased sample of lawyers, since the lawyers who hired scientific experts and worked with them may be better able to grasp scientific concepts than the general run of attorneys.

Table 6

Perceptions of and Attitudes toward the Legal System with Quasi Control Group*

| Item | Never asked (n=152) | Participated (n=124) | Asked and agreed but did not participate (n=32) | Overall p-level** |
|---|---|---|---|---|
| Science should aid law | 4.14 | 4.00 | 3.97 | ns |
| Judges can understand science | 2.80 | 2.85 | 2.94 | ns |
| Jurors can understand science | 2.42 | 2.39 | 2.41 | ns |
| Lawyers can understand science | 2.82_a | 3.18_b | 2.75_a | p < .005 |
| Scientists are treated with respect | 3.15_a | 3.43_b | 3.24_ab | p < .01 |
| Experts compromise standards | 3.18 | 3.37 | 3.10 | ns |
| Links real world and science | 2.72_a | 3.12_b | 2.90_ab | p < .007 |
| Success of legal system with science | 52.5%_a | 70.0%_b | 51.6%_a | p < .01 |

* Scale ranges from 1 (strongly disagree) to 5 (strongly agree)

** “p <” = significance level; ns = not significant at the .05 level

Note: Subscripts indicate significant differences on the post hoc comparisons.

Most important, we compared the groups on their views about the success of the legal system in dealing with scientific matters. Again, the participants viewed the legal system as more successful (70.0 percent) than both those never asked (52.5 percent) and those who agreed but did not have an opportunity to participate (51.6 percent). The pattern is only suggestive in light of the small number of quasi-control respondents and the unknown reasons why they did not end up participating. Nevertheless, the comparison gave the data an opportunity to support the possibility that our results stemmed from preexisting views of the legal system, and the data pointed in the opposite direction.

This survey provides unique information about how scientists interact with and view the legal system. There are aspects of our data that we have yet to plumb, but even after further analysis, we must be careful in generalizing from our results: The findings we report may characterize only, or largely, the kinds of scientists who achieve substantial success in their fields. We do not know how scientists who market themselves as scientific experts, including scientists who work for consulting firms or the large group of forensic scientists who testify regularly for the prosecution, would answer the questions we posed.36 Also, given the age and accomplishments of Academy members who are scientists, we cannot be certain how the generation of scientists now entering the most productive portions of their careers views the legal system or would respond to proposed changes in legal procedure. Nevertheless, the snapshot we provide of the group of eminent scientists who responded to our survey is an important one. Our respondents have expertise that is crucial for a legal system that must increasingly take account of scientific understandings and will be well served only if the science available to it is both clear and sound.

In this respect, the good news is that the Academy survey reveals that the legal system has often been able to draw on distinguished scientists and engineers for assistance when scientific and engineering questions intersect with the law. This capacity can be expected to continue into the future. When asked, most scientific experts are willing to participate in legal actions, at least some of the time. Still, the relationship has its trouble spots, including some discomfort with the adversary system, that seem to reflect the different cultural norms of science and law. Although our survey responses suggest that several modest changes in trial procedures could have positive effects for both experts and triers of fact, as other essays in this volume indicate, tensions between science and the law are unlikely to ever completely disappear.

AUTHOR’S NOTE

Our thanks to Beth Murphy, American Bar Foundation, and Eleanor Wilking, Northwestern University Pritzker School of Law, for their excellent assistance. We are grateful to the American Academy of Arts and Sciences and to the American Bar Foundation for funding support. We are also grateful for valuable feedback from the authors of the other essays in this volume who were participants in the American Academy of Arts and Sciences meeting.

ENDNOTES

1 The tryal of Spencer Cowper, Esq, John Marson, Ellis Stevens, and William Rogers, gent. upon an indictment for the murther of Mrs. Sarah Stout, a Quaker before Mr. Baron Hatsell, at Hertford assizes, July 18, 1699, 45. The judge went further: “Dr. Brown has a learned discourse in his Vulgar Errors upon this subject, concerning the floating of dead bodies, I don’t understand it my self, but he hath a whole chapter about it.” Ibid., 16.

2 Stephen Breyer, “The Interdependence of Science and Law,” Science 280 (5363) (1998): 537.

3 Daubert v. Merrell Dow Pharmaceuticals, Inc., 509 U.S. 579 (1993).

4 Daubert v. Merrell Dow Pharmaceuticals, Inc., 43 F.3d 1311, 1316 (9th Cir. 1995), cert. denied, 116 S.Ct. 189 (1995).

5 Sophia I. Gatowski, Shirley A. Dobbin, James T. Richardson, et al., “Asking the Gatekeepers: A National Survey of Judges on Judging Expert Evidence in a Post-Daubert World,” Law & Human Behavior 25 (5) (2001): 433; and John Meixner and Shari Seidman Diamond, “The Hidden Daubert Factor: How Judges Use Error Rates in Assessing Scientific Evidence,” Wisconsin Law Review 2014 (6) (2014): 1063.

6 Andre A. Moenssens, “Ethics: Codes of Conduct for Expert Witnesses,” in Wiley Encyclopedia of Forensic Science, ed. Allan Jamieson and Andre A. Moenssens (Hoboken, N.J.: Wiley-Blackwell, 2016).

7 American Psychological Association, “Ethical Principles of Psychologists and Code of Conduct” [amended February 20, 2010], American Psychologist 57 (2002): 1060–1073.

8 National Research Council, Strengthening Forensic Science in the United States: A Path Forward (Washington, D.C.: National Academies Press, 2009). The federal government responded to the NAS report on the state of the forensic sciences first with the formation of an interagency task force to consider the report’s critiques and recommendations, and then with the establishment of the National Commission on Forensic Science, a distinguished panel of academics, judges, prosecutors, forensic scientists, and defense counsel, charged with assessing and ensuring the scientific integrity of the various forensic sciences. The Commission was, however, terminated after President Trump took office. Still ongoing, as we write, is a National Institute of Standards and Technology – Department of Justice effort in which representatives of the various forensic sciences are working to establish performance and communication standards for their disciplines.

9 National Research Council, A Convergence of Science and Law (Washington, D.C.: National Academies Press, 2001). The concern of courts and the legal academy is not that lawyers cannot find experts. Rather, the concern is with the pool of available experts, their biases and scientific competence, and their effectiveness in communicating their scientific opinions fairly and clearly in court. Hence the Council’s concern is with factors that discourage the most able scientists from lending their expertise to the courts.

10 A two-part survey: Anthony Champagne, Daniel Shuman, and Elizabeth Whitaker, “An Empirical Examination of the Use of Expert Witnesses in American Courts,” Jurimetrics Journal 31 (4) (1991): 375; and Daniel W. Shuman, Elizabeth Whitaker, and Anthony Champagne, “An Empirical Examination of the Use of Expert Witnesses in the Courts – Part II: A Three City Study,” Jurimetrics Journal 34 (2) (1994): 193. See also Jonathan Baker and M. Howard Morse, Final Report of Economic Evidence Task Force, Task Force on Economic Evidence (Chicago: American Bar Association, 2006), Appendix II.

11 Michael J. Saks and Richard Van Duizend, The Use of Scientific Evidence in Litigation (Williamsburg, Va.: National Center for State Courts, 1983).

12 Stephen E. Fienberg, ed., The Evolving Role of Statistical Assessments as Evidence in the Courts (New York: Springer, 1989). Joseph B. Kadane and Caroline Mitchell, “Statistics in Proof of Discrimination Cases” and Richard O. Lempert, “Befuddled Judges: Statistical Evidence in Title VII Cases” in Legacies of the 1964 Civil Rights Act, ed. Bernard Grofman (Charlottesville: University of Virginia Press, 2000), 241–262 and 263–281, respectively, both analyze court responses to expert evidence in the same seventeen cases.

13 We developed the survey with assistance from John Randell and Keerthi Shetty at the American Academy. We revised our draft and improved it substantially following conference calls with scientists, engineers, and legal scholars who had reviewed the original draft of the survey, as well as with the benefit of a conversation with Justice Stephen Breyer (December 2015 – January 2016). Robert Townsend of the American Academy formatted and distributed the online survey in two waves (April 2016 and September 2016).

14 This group also included sixty Academy members from Class V (public affairs, business, and administration) whose substantive expertise was in science or engineering.

15 Nathaniel Hafer, Cheryl J. Vos, Karen McAllister, et al., “How Scientists View Law Enforcement,” Science Progress, February 2009. (The American Association for the Advancement of Science and the American Academy of Arts and Sciences share the AAAS acronym, but are different organizations with different memberships. The Association in the Hafer et al. survey is a voluntary subscription organization, while the Academy Fellows we surveyed were scientists nominated by their peers and voted into the Academy based on their professional accomplishments.) See also Brian J. Love, “Do University Patents Pay Off? Evidence from a Survey of University Inventors in Computer Science and Electrical Engineering,” Yale Journal of Law and Technology 16 (2) (2014): 285, 299 [reporting an 11.3 percent response rate].

16 Chi-squared = 9.16, p < .003. The higher response rate for women is not unusual: see, for example, William G. Smith, Does Gender Influence Online Survey Participation? A Record-Linkage Analysis of University Faculty Online Survey Response Behavior (San José, Calif.: San José State University, 2008); it also occurred in the follow-up sample (23 percent women).

17 Chi-squared = 8.14, p < .005. Although the mean age in the follow-up survey was seventy-one, the distribution of respondents was somewhat closer to the population, with 74 percent aged sixty-five or older.

18 In contrast, Class III members were somewhat underrepresented in the follow-up survey (26 percent), so that together the two surveys had representation from Class III that was similar to the population (30 percent versus 28 percent). The other two classes had nearly identical representation in the population, first, and second surveys (Class I: 37.5 percent, 35.5 percent, 39.1 percent; Class II: 34.1 percent, 31.1 percent, 34.8 percent).

19 Sixty percent in the follow-up survey reported they had been asked at least once, 33 percent at least three times, and 12 percent ten or more times.

20 These percentages are based on disciplinary categories with at least ten respondents. We did not obtain disciplinary information in the follow-up survey.

21 In the follow-up survey, 84 percent of respondents agreed to assist at least once. Accepted invitations did not always result in participation: 20 percent of those in the original sample and 4 percent in the follow-up survey who agreed did not end up participating.

22 Respondents could also answer that they were undecided, disagreed, or strongly disagreed. Agreement with some other statements was considerably lower; for instance, agreement with the assertions that most judges and arbitrators, jurors, and lawyers have the ability to understand scientific evidence and the scientific process was 30 percent, 12 percent, and 39 percent, respectively.

23 Respondents were asked to choose all applicable sources from the following list: my scholarship (77 percent); an expert referral organization (6 percent); a referral from another lawyer (21 percent); name provided by the client (23 percent); recommended by another expert (22 percent); don’t know (7 percent).

24 Joseph Sanders, Betty Rankin-Widgeon, Debra Kalmuss, and Mark Chesler, “The Relevance of ‘Irrelevant’ Testimony: Why Lawyers Use Social Science Experts in School Desegregation Cases,” Law and Society Review 16 (3) (1981–1982): 403 [plaintiff lawyers appear to have easier access to a network of scholars willing to testify].

25 Respondents rated various features of the trial, deposition, or report/affidavit on a 5-point scale: very negative, somewhat negative, neutral, somewhat positive, very positive.

26 A subset of thirty-five respondents provided all three ratings because their most recent experience had required a report, a deposition, and testimony. The pattern for this subset mirrored the results in Figure 1.

27 Two of those who said they were unlikely to serve were federal judges, and eight did not indicate why.

28 The third and seventh quotes in the text came from respondents without experience; the remaining quotes came from respondents with prior experience.

29 For each potential change, respondents were asked: If [change was made], I would definitely be more likely to participate; I would probably be more likely to participate; It would have no effect on my decision whether to participate; I would probably be less likely to participate; I would definitely be less likely to participate.

30 Daniel L. Rubinfeld and Joe S. Cecil, “Scientists as Experts Serving the Court,” Dædalus 147 (4) (Fall 2018).

31 Chi-squared(4) = 10.51, p < .04.

32 Chi-squared(4) = 19.74, p < .001.

33 See Ian Freckelton QC, Jane Goodman-Delahunty, Jacqueline Horan, and Blake McKimmie, Expert Evidence and Criminal Jury Trials (Oxford: Oxford University Press, 2016), chap. 3.

34 Respondents were asked to rate the legal system’s success by choosing among four options: very successful, somewhat successful, somewhat unsuccessful, very unsuccessful.

35 Chi-squared = 8.31, p < .004. This pattern was replicated in the follow-up survey. Sixty-eight percent of participants viewed the legal system as successful, while 61 percent of the nonparticipants did; Chi-squared for the combined samples = 9.15, p < .002. Neither age nor gender was associated with this view. A majority of all three Academy classes viewed the system as successful, although Class II members (biological sciences) were least positive (55.8 percent), Class I members (mathematical and physical sciences) were more positive, and Class III (social sciences) were most positive (67.9 percent) (Chi-squared(2) = 5.36, p < .07). Nonetheless, within each class, those who had participated as experts in the legal system were more likely to view the legal system as successful than those who had not. When age, gender, Academy class, and participation are used to predict judged success, only participation is a significant predictor (Wald = 7.09, p < .01).

36 Among those respondents who said they had provided assistance, most (84 percent) said they had assisted primarily in civil cases, 11 percent primarily in criminal cases, and 5 percent in both about equally. Assistance in civil cases was fairly evenly divided, with 33 percent primarily assisting plaintiffs, 27 percent primarily assisting defendants, and 40 percent assisting both about equally. Among the small group of 14 respondents who reported experience assisting in criminal cases, half (7) primarily assisted the defense, 4 primarily the prosecution, and 3 both sides about equally. The Academy sample thus included little if any representation from the large cadre of government-employed forensic scientists who regularly appear in criminal court cases.