On November 9, 2011, Daniel Kahneman was awarded the Talcott Parsons Prize by the American Academy for his pioneering research in behavioral economics. The award, presented at a ceremony in Cambridge, honors outstanding contributions to the social sciences. At the award ceremony, Kahneman spoke on “Two Systems in the Mind.” An edited transcript of his presentation follows.

Daniel Kahneman

Daniel Kahneman, recipient of the American Academy’s 2011 Talcott Parsons Prize, is a Senior Scholar and the Eugene Higgins Professor of Psychology, Emeritus, and Professor of Psychology and Public Affairs, Emeritus, at Princeton University. He has been a Fellow of the American Academy since 1993.

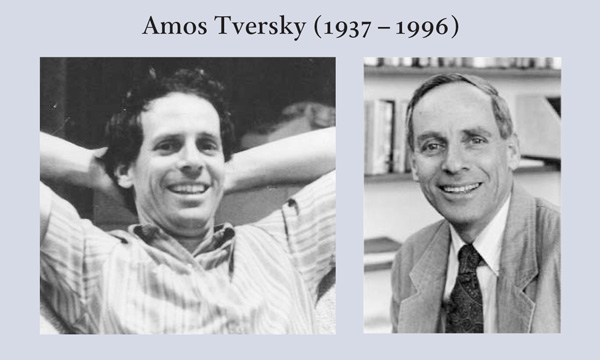

The work for which I am being honored was done in collaboration with Amos Tversky (see photographs below); he and I had a lot of fun studying judgment and decision-making together. For fifteen years, I had the exceptional joy of being part-owner of a mind that was much better than my mind, and I think Amos felt the same way. We somehow were better together than we were singly.

The research that we did was essentially introspective. We certainly collected data, but that was almost incidental. Our major method was simply to spend many hours together every day, generating puzzles for each other. What we were looking for were cases in which we knew the answer to a puzzle, but our intuition wanted to say something else. That is, we were looking for counter-intuitive ideas in our own thinking, and we devised many problems in which the intuitive answer is wrong.

To give you a sense of how that works, consider this example: Steve, who is a meek and tidy soul, has a need for order and structure and a passion for detail. Is he more likely to be a librarian or a farmer? Bearing the description of Steve in mind, your intuition tells you that he resembles a librarian much more than a farmer. That resemblance is immediately transformed into a judgment of probability. This process happens to most people, and it happens very quickly and quite robustly.

In many cases, people can easily solve problems correctly when presented with two versions. For example, how much would you pay for a cold cut of meat that is 90 percent fat free, and how much would you pay for cold cuts that are 10 percent fat? When those two problems are shown together, people see that they are identical. Viewed separately, however, they are not identical: people will pay more for 90 percent fat free than for 10 percent fat. There is an immediate intuitive reaction to each description, an emotional reaction that is translated into the price that people are willing to pay.

Such self-contained and very short examples were the key to the cross-disciplinary impact of our work. This feature was largely incidental; we presented the problems as part of the text so that people would read the examples and relate them to their own experience. I think that if we had presented the data only in the manner in which psychological data are conventionally presented, it would have had very little impact. But because we included such relatable examples, people outside the discipline could appreciate that yes, this is something that they had not suspected about their own thinking.

Figure 1

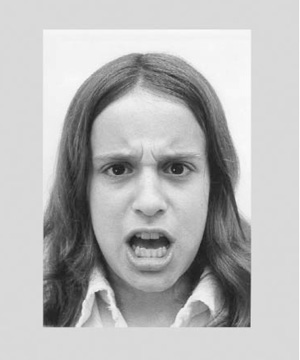

The examples make us keenly aware of two kinds of thinking. There is intuition, and then there is computation, or reasoning. The very first study that Amos and I did together was of the statistical intuitions of statisticians – that is, people who were quite versed in statistics – and we demonstrated that their intuitions were indeed flawed. The contrast between intuition and reasoning has long been known, but in the past twenty years, it has attracted considerable attention. In psychology, we now speak of two types of thinking. Figure 1 reveals one way that thoughts come to mind. The lady in the photograph is angry, and you know that she is angry as soon as you see her – as quickly as you know that her hair is dark. The important aspect of the experience is that it is something that happens to you, it is not something that you do. You do not decide to make a judgment of this person. You just perceive her. The ancient Greeks described seeing the world as largely a passive experience. In the same way, intuitive ideas come to mind unbidden, on their own. When you look at this picture, notice also that perception involves an element of prediction. You already know something about what the woman will sound like, and you know something about the general character of the next thing that she will say.

A simple mathematical problem demonstrates another way that thoughts come to mind. When first faced with the problem of, say, 24 x 17, probably nothing comes to mind. In order to generate the answer, you have to do something entirely different. You have to bring up a program that you learned in elementary school, and then you have to complete a series of steps, all the while remembering the partial products and what to do next. This is not something that happens to you, it is something you do. Now, 2 + 2 = 4 happens to you, but 17 x 24 = 408 is something you have to do. The experience that we have in solving the problem makes us the authors of the product; there is a sense of agency and will. Performing this action requires focused attention.
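The elementary-school procedure described above can be written out step by step. This is only a sketch, assuming the common decomposition of 24 into 20 + 4; the talk does not say which decomposition a given person would use.

$$
17 \times 24 = 17 \times (20 + 4) = (17 \times 20) + (17 \times 4) = 340 + 68 = 408
$$

The partial products 340 and 68 are the intermediate results that have to be held in memory while the next step is carried out.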

Furthermore, it is effortful, and there are several ways of measuring effort. One is physiological: the pupil of the eye will dilate, its area increasing by approximately 50 percent; heart rate will increase; and many other changes will occur while a person is engaged in solving that problem. More important, the fact that this computation is effortful means that you cannot carry it out while doing something else that is demanding. Very few people can – and no one should try to – complete that computation while making a left turn into traffic. Attention is a limited resource, and the amount required to perform the computation leaves very little to perform other tasks. If there is a priority, such as making a left turn into traffic, you will stop the computation. Everyone has an executive control that allocates attention to different tasks. It determines when attention is required for some operations and not for others.

Psychologists have had much to say about the two types of mental operations. One is automatic, experienced passively and usually rapidly. We have called it “fast thinking.” The other is effortful, deliberate, demanding of attention. Automaticity is the defining feature of fast thinking, or Type 1 thinking. Effort and deliberate attention are the main characteristics of Type 2 processes.

I have adopted a different terminology: I speak of System 1 and System 2. I want to apologize for using this terminology because it is considered almost sinful in the circles in which I travel. System 1 and System 2 are fictitious characters; they do not exist as systems or have a distinctive home in the brain. Yet, I think these terms are very useful. To explain my choice, I turn to the book Moonwalking with Einstein (2011). In the story, author Joshua Foer, who is the brother of writer Jonathan Safran Foer and is himself a science writer, undergoes memory training and, a year later, becomes the Memory Champion of the United States. He can memorize decks of cards in a couple of minutes and perform many other feats of memory that most people would consider – and that he, himself, had considered – incredible. What makes this kind of accomplishment possible?

It turns out that the human mind and human memory are much better at some tasks than others. Evolution has shaped our brains so that there are tasks we do easily and others we don’t do easily. In particular, we are terrible at remembering lists, but we are very good at remembering routes through space. If you want to remember a list, you must imagine a familiar route and mentally distribute the items in the list around that route. Then you can find these items when you need them. This is basically how people memorize decks of cards and perform other miracles of memory.

We are also not very good at understanding sentences that have abstract subjects, but we are very good at thinking about agents. Agents can be people or other things that act. We can assign actions to them, remember what they do, and, in some sense, remember why they do it. We form a global image of agents.

My choice of terms is considered a sin because we are not supposed to explain the behavior of the mind by invoking smaller minds within the mind. The reliance on homunculi is a terrible thing to do if you are a psychologist. Nevertheless, I will speak of System 1 and System 2 because I think it is easier for people – myself included – to think about systems than to think about the more abstract Type 1 and Type 2. We can always translate any statement about System 1 into Type 1 characteristics. For example, we can say that System 1 generates emotions; in Type 1 terms, we would say that emotions arise automatically, effortlessly, and relatively quickly when the appropriate stimulus arises. But it is often simpler to speak of the characteristics of System 1 and System 2.

System 2 performs complex computations and intentional actions, mental as well as physical. It is useful to think of System 2 as the executive control of what we think and what we do. That turns out to be a difficult task; controlling ourselves demands effort. We know that self-control is impaired when we are engaged in the effort of doing other things. For example, if you ask people to remember seven digits, and then to perform other tasks while keeping those seven digits in mind, they will behave differently than they would if they were not trying to remember seven digits. Given a choice between sinful chocolate cake and virtuous fruit salad, they are more likely to choose the chocolate cake if they are trying to remember the seven digits because the effort impairs self-control. Self-control is part of the limited resources system, and we can deplete the limited resources system so that after someone has tried for ten minutes to watch an emotional film while keeping a straight face, the ability to perform a hand-grip task is weakened. The person is less able to perform the act of will that is needed to make a powerful hand grip.

Now that I have introduced you to the two systems, I will tell you a few things about System 1. Most of the information we have about System 1 was not available when Amos and I did our work. When you put fairly recent psychological research together with what we knew, things begin to make more sense.

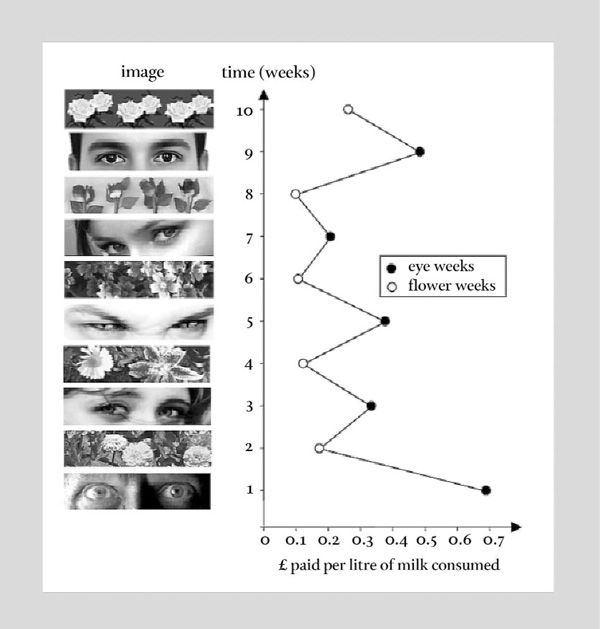

Let me give you an example of the new research. One study was done in a U.K. laboratory where, as is often the case in U.K. laboratories, there was a little room where people could help themselves to coffee, tea, biscuits, and milk. There was an honesty box into which people deposited money. Someone had the idea to put a poster on top of the honesty box that would change from week to week (see Figure 2). In the first week, the poster featured two gigantic eyes (see the bottom of Figure 2), and people contributed about 70 pence. The second week, the poster was of flowers, and the contributions fell to less than 20 pence. The third week, it was eyes again, and contributions rose; the fourth week, it was flowers, and contributions fell. This pattern, which continued over several weeks, is a very large and completely mysterious effect insofar as the people contributing to the honesty box are concerned. They barely knew the posters were there; they had no idea what was happening to them. Their unconscious actions were the result of operations within the associative system. That is, we make a powerful association between eyes and being watched, between eyes and morality, and between eyes and behaving well. The significant effect that this association can have on behavior operates without people being aware of it. We learn from this experiment and many others like it that symbolic connection in associative memory can control behavior.

Figure 2

System 1 is not only responsible for emotions, but also for skillful behavior. We have what we call intuitive expertise, evident in chess masters who can see a situation and say, “white mates in three,” or in physicians who can diagnose a disease at a glance. Highly skilled responses become automatic and therefore have the characteristics of System 1 activities. Here again, the skilled solutions are experienced as if they came to mind by themselves. All the moves that come to the mind of a master chess player are strong moves, and the diagnoses that occur spontaneously to a very experienced physician tend to be correct, so correct intuitions are part of System 1. System 1 is a repository of the knowledge that we have about the world, and its performance is extraordinary.

For instance, there is a study in which people listen to a series of spoken sentences while the events in their brains are recorded. At some point, an upper-class British male voice says, “I have large tattoos all down my back.” Within approximately three-tenths of a second of hearing the sentence, the brain responds with a characteristic signature of surprise. An incongruity has been detected. Probably all of you detected it from my description at about that speed. You have to recognize that the voice is upper-class British male. Somehow, you have to remember or make the connection that an upper-class British male probably does not have tattoos down his back. The conjunction is surprising, and the brain responds with surprise. In our terms, System 1 would detect the abnormality, then activate System 2 to process the incongruity in greater depth. World knowledge is built into this process.

I will give you an experience of this phenomenon, though you will not enjoy it. In the last twenty years, we have learned that something happens to anyone who sees the two words banana and vomit together. First, you read the words automatically. That is, you did not decide to read the words; in fact, you had no choice: this is a System 1 activity. Second, unpleasant memories and images came to your mind. Third, there was a physical reaction: you recoiled. Everyone who has been exposed to such words – to threat words – has recoiled. The effect is slight, but it is measurable. You made a disgusted face; you felt disgust. Interestingly, many of the changes that occur, all of which happen very quickly, tend to reinforce each other. The emotion of disgust makes you produce a disgusted face. Making a disgusted face reinforces the feeling of disgust. We know that making people shape their face in particular ways has an effect on their emotions. For example, if people hold a pencil horizontally between their lips, they find cartoons funnier, because holding a pencil like this forces your face into a slight replica of a smile, and that makes things funnier. Putting a pencil the other way makes you frown, and you find cartoons less funny. So what emerges from this reaction is a coherent pattern of activation.

Having seen the two words, you are prepared to see other words that belong to the same context, so that if you were listening to words spoken in a whisper, you would find it easier than usual to recognize smell, hangover, nausea, and many other associated words. In a sense, you are prepared for them. A number of physiological changes also occur, indicating that you are generally more vigilant because the stimulus is threatening.

Finally, there is the word banana. Nothing suggests that bananas caused the illness, but that connection was made. The associative memory automatically searches for a causal explanation. Bananas are available for a cause, so for a short while you might stay away from bananas because they appear to have caused illness. All of this happens automatically and is a characteristic of how System 1 works. Our associative system is a huge network of ideas. Any stimulus or situation activates a small subset of those ideas. Activation spreads so that you are now prepared for other ideas, although they do not come consciously to mind. An important feature of this process is that it is highly context-dependent.

Figure 3

Figure 4

System 1 generates stories, and they tend to be coherent stories in response to stimuli. What I mean by a story is the causal connection that people search for automatically. I do not have time to tell you about the many experiments showing this process, but I can demonstrate the most important aspect: namely, the coherent solution that is imposed. Figure 3 is a familiar demonstration from psychology in the context of perception. You read the first series of characters as “A, B, C,” the second as “12, 13, 14.” Of course, as Figure 4 reveals, the B and the 13 are physically identical. In the context of letters, the same figure is read as a letter that in the context of numbers is read as a number. When you take the context into account in interpreting any part of the situation, the ambiguity is suppressed. You are not aware when you see the B that it could just as well be a 13. The suppression of ambiguity is a general feature of System 1. So we generate coherent stories and solutions to problems. They come to mind very easily, and we are not aware that things could be otherwise. I could say much more about System 1, but I will add one key idea.

A remarkable feature of our thinking is our expertise. It is not only chess masters who have expertise. We have expertise in driving. I have expertise in recognizing my wife’s mood from the first word on the telephone, and I am certainly not alone in that proficiency. But there are questions on which we do not have expertise, and which are quite difficult to answer. Yet System 1 generates answers to those impossible questions, such as, how happy are you? Or, what is the probability that President Obama will be reelected? We have many answers to impossible questions, and they arise very quickly in our minds. Analysis shows that in order to answer a difficult question, we answer a related, easier question. The substitution of an easy question for a hard one is the mechanism of what Amos and I labeled judgment by heuristics. Some heuristics are applied deliberately, but many are applied automatically. One example is buying travel insurance.

The particular study I am about to discuss was carried out at a time when terror incidents were affecting Europe. Some people were asked how much they would pay for insurance that pays $100,000 in case of death for any reason. Other people were instead asked how much they would pay for insurance that pays $100,000 in case of death in a terror incident. The study showed that people would pay much more for the second policy than for the first. If they had seen both problems together, they likely would not have offered to pay more for one policy than for the other, but they saw only one problem. Deciding how much you would pay for insurance is very difficult. But you do know how afraid you are. The paradoxical pattern of willingness to pay reflects the fact that people are more afraid of dying in a terror incident than of dying for any reason. Fear does not obey the logic of inclusion, and responses that are based on fear do not obey the rules of inclusion. This is how we violate logic. This is why the heuristics of judgment generate biases and errors.
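The logic of inclusion that these responses violate can be stated compactly. The notation here is an assumption introduced for illustration and does not appear in the talk: let $T$ stand for death in a terror incident and $D$ for death for any reason.

$$
T \subseteq D \;\Longrightarrow\; P(T) \le P(D)
$$

Because every death in a terror incident is also a death for any reason, the first policy pays out in every case that the second does. On that logic the first policy should be worth at least as much as the second, which is the opposite of what people offered to pay.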

I will end with a quick demonstration. I will tell you about Julie, a young woman who is a graduating senior at a university. I will tell you one fact about her: that she read fluently when she was four years old. Now, I will ask, what is her GPA? Oddly enough, you all know to some extent what her GPA is. It came to your mind very quickly; it is more than 3.2, less than 4.0, and probably somewhere between 3.6 and 3.7, depending on how much grade inflation there is at the institution you have in mind. But we know the mechanism of how this happens. When I tell you about someone who read fluently at age four, you have an impression of how precocious she was. Then, when I ask you what her GPA is, you generate an answer that is about as extreme as your initial impression of the precocity of a child who reads fluently at age four. This is a ridiculous way of answering the question because it violates every principle of statistics, but that is the way our intuition works: we substitute an easy question for a hard one.

There is much more to be said about System 1. I have just written a book about it, but I won’t give away the entire book.

© 2012 by Daniel Kahneman

Daniel Kahneman, Thinking, Fast and Slow (New York: Farrar, Straus and Giroux, 2011).

To view or listen to the presentation, visit https://www.amacad.org/content/events/events.aspx?d=486.