Chapter 2: The Use of fMRI in Lie Detection: What Has Been Shown and What Has Not

Nancy Kanwisher

Can you tell what somebody is thinking just by looking at magnetic resonance imaging (MRI) data from their brain?¹ My colleagues and I have shown that a part of the brain we call the “fusiform face area” is most active when a person looks at faces (Kanwisher et al. 1997). A separate part of the brain is most active when a person looks at images of places (Epstein and Kanwisher 1998). People can selectively activate these regions during mental imagery. If a subject closes her eyes while in an MRI scanner and vividly imagines a group of faces, she turns on the fusiform face area. If the same subject vividly imagines a group of places, she turns on the place area. When my colleagues and I first got these results, we wondered how far we could push them. Could we tell just by looking at the functional MRI (fMRI) data what someone was thinking? We decided to run an experiment to determine whether we could tell in a single trial whether a subject was imagining a face or a place (O’Craven and Kanwisher 2000).

My collaborator Kathy O’Craven scanned the subjects, and once every twelve seconds said the name of a famous person or a familiar place. The subject was instructed to form a vivid mental image of that person or place. After twelve seconds Kathy would say, in random order, the name of another person or place. She then gave me the fMRI data from each subject’s face and place areas.

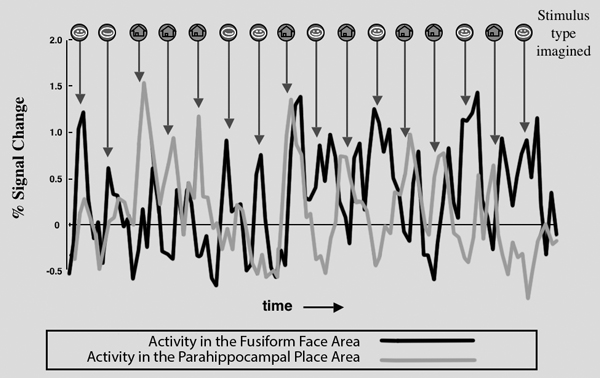

Figure 1 shows the data from one subject. The x-axis shows time, and the y-axis shows the magnitude of response in the face area (black) and the place area (gray). The arrows indicate the times at which instructions were given to the subject. My job was to look at these data and determine for each trial whether the subject was imagining a face or a place. Just by eyeballing the data, I correctly determined in over 80 percent of the trials whether the subject was imagining faces or places.

I worried for a long time before we published these data that people might think we could use an MRI to read their minds. Would they not realize that the results obtained in my experiment were for a specific, constrained situation? That we used faces and places because we know which highly specific parts of the brain process those two categories? That we selected only cooperative subjects who were good mental imagers? And so on. I thought, “Surely, no one would try to use fMRI to figure out what somebody else was thinking!”

Figure 1. Time course of fMRI response in the fusiform face area and parahippocampal place area of one subject over a segment of a single scan, showing the fMRI correlates of single unaveraged mental events. Each black arrow indicates a single trial in which the subject was asked to imagine a specific person (indicated by face icon) or place (indicated by house icon). Visual inspection of the time courses allowed 83 percent correct determination of whether the subject was imagining a face or a place. Source: O’Craven and Kanwisher 2000.
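The trial-by-trial judgments above were made by eye, not by an algorithm. As a purely hypothetical illustration of the same kind of decision, the Python sketch below labels each trial “face” or “place” according to which region’s mean response is larger in a fixed window after the spoken cue. The window length, the assumption that both time courses are already in comparable units (for example, percent signal change), the function names, and the toy numbers are all illustrative assumptions, not details of the O’Craven and Kanwisher study.

```python
from statistics import mean
from typing import Sequence


def classify_trial(face_area: Sequence[float], place_area: Sequence[float],
                   onset: int, window: int) -> str:
    """Guess 'face' or 'place' for one imagery trial.

    Assumes both time courses are sampled at the same times and expressed in
    comparable units; `onset` is the sample index of the cue and `window` is
    the number of samples averaged after it (both hypothetical choices).
    """
    face = mean(face_area[onset:onset + window])
    place = mean(place_area[onset:onset + window])
    return "face" if face > place else "place"


def percent_correct(face_area: Sequence[float], place_area: Sequence[float],
                    onsets: Sequence[int], truth: Sequence[str],
                    window: int) -> float:
    """Percentage of trials whose guessed category matches the instructed one."""
    guesses = [classify_trial(face_area, place_area, t, window) for t in onsets]
    hits = sum(g == t for g, t in zip(guesses, truth))
    return 100.0 * hits / len(truth)


# Made-up time courses: two trials of four samples each.
face_tc = [0.9, 1.2, 1.0, 0.8, 0.1, 0.2, 0.1, 0.0]
place_tc = [0.2, 0.1, 0.3, 0.2, 0.8, 1.1, 0.9, 0.7]
print(percent_correct(face_tc, place_tc, onsets=[0, 4],
                      truth=["face", "place"], window=4))  # prints 100.0
```

Such a rule works only because the two categories have known, highly selective brain regions, which is exactly the constraint described above.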

My concern proved to be premature. Almost no one cited this work for several years. In the past couple of years, however, our findings have been more widely discussed—for example, in Time Magazine, on NPR, and in the New York Times Magazine (though oddly these venues still fail to cite our paper when describing our results)—and at least two companies, Cephos Corp. and No Lie MRI, have begun marketing fMRI “lie-detection” services. The Cephos website says, “Lying is shown to activate specific, discrete parts of the brain. We can use those regions to determine if a person is lying with a high degree of accuracy. No activation is seen when telling the truth.” The No Lie MRI website includes a product overview that boasts, “Current accuracy is over 90% and is estimated to be 99% once product development is complete” (No Lie MRI 2006).

Does any real science lie behind these claims? The literature on using fMRI for lie detection can be divided into two groups (see Figure 2). The first uses group analysis, and the second focuses on individual subject data. In group analysis, subjects’ brain scans are aligned as closely as possible. The data are then averaged and analyzed as a group. Group studies can be useful for identifying patterns of brain response that are consistent across subjects. Group studies are not useful for determining whether a particular subject is lying. Studies that analyze individual subject data are relevant for trying to determine whether fMRI is useful for lie detection, so we discuss these findings in turn.

Figure 2. List of empirical studies of fMRI lie detection published in peer-reviewed journals as of January 2007, grouped into group studies (section I) and individual-subject studies. (Table not reproduced.)

STUDY 1

Kozel and colleagues (2005) instructed subjects to “steal” either a watch or a ring and to place the “stolen” item with their belongings in a locker while being watched by one of the investigators. They were then scanned with fMRI while answering a set of questions presented in a random order. For example, they might be asked, “Did you take the watch from the drawer?” Or, “Is the ring in your locker?” There were twenty roughly synonymous versions of each question, as well as a variety of “neutral” conditions; for example, “Do you like to swim?” Subjects were instructed to answer questions about the ring or watch as if they had stolen neither object and to answer the other questions truthfully. Thus, for the ring condition subjects were “lying” and for the watch condition they were “telling the truth,” or vice versa. Participants were also told that they would receive $50 if the other investigator could not tell from the MRI data when they were lying.

What did they find? Well, first let me explain the basic logic of the analysis. Simply put, the idea is to use half of the subjects to discover some kind of brain “signature” of lying versus truth, and then to use that signature to classify the other subjects as lying or telling the truth. More specifically, the researchers found seven activation “blobs” in the brain that produced a stronger response during the lie condition than during the truth condition, and they identified the three or four of those blobs that best discriminated between truths and lies. They then used those blobs to classify the activations in the other subjects. For each 3-D pixel, or “voxel,” in the brain, they ran a statistical test asking whether that voxel produced a stronger response during the lie condition than during the neutral condition, and they tallied how many voxels showed that pattern versus how many produced a stronger response in the truth condition than in the neutral condition. If there were more lie voxels than truth voxels, they counted the model as having correctly identified which condition was the lie in that subject. By this measure, they could correctly determine for 90 percent of subjects which was the lie and which was the truth.
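The voxel-counting decision rule just described can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not Kozel and colleagues’ code: the t-statistic threshold of 2.0, the condition labels “A” and “B,” the tie handling, and the example numbers are all hypothetical.

```python
from typing import Dict, List, Sequence


def count_above(t_values: Sequence[float], threshold: float) -> int:
    """Number of voxels whose t-statistic (condition vs. neutral) exceeds the threshold."""
    return sum(1 for t in t_values if t > threshold)


def pick_lie_condition(t_a_vs_neutral: Sequence[float],
                       t_b_vs_neutral: Sequence[float],
                       threshold: float = 2.0) -> str:
    """Forced choice: call whichever condition (A or B) has more voxels
    responding above neutral the 'lie'. Ties are left undecided."""
    n_a = count_above(t_a_vs_neutral, threshold)
    n_b = count_above(t_b_vs_neutral, threshold)
    if n_a == n_b:
        return "undecided"
    return "A" if n_a > n_b else "B"


def forced_choice_accuracy(subjects: List[Dict]) -> float:
    """Fraction of test subjects whose lie condition is picked correctly."""
    correct = sum(
        pick_lie_condition(s["t_a"], s["t_b"]) == s["lie"] for s in subjects
    )
    return correct / len(subjects)


# Hypothetical t-statistics inside the discriminative regions for two subjects.
subjects = [
    {"t_a": [3.1, 2.5, 0.4], "t_b": [1.0, 2.2, 0.1], "lie": "A"},
    {"t_a": [0.3, 1.9, 2.4], "t_b": [2.8, 3.3, 2.1], "lie": "B"},
]
print(forced_choice_accuracy(subjects))  # prints 1.0
```

Note that the rule never has to decide whether a subject is lying at all; it only chooses between two conditions, one of which is known to be the lie. Guessing at random would already be right half the time, so the 90 percent figure should be read against a 50 percent baseline.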

This is not really lie detection. The researchers always know that the subjects are lying in response to one of the sets of non-neutral questions. Rather than answering the question “Can you tell whether the subject is lying,” this research is answering the question “Can you tell which response is the truth and which is the lie?”

STUDY 2

Langleben and colleagues (2005) scanned twenty-six subjects. Before the scan, a researcher presented each subject with an envelope containing two playing cards (the seven of spades and the five of clubs) and a $20 bill. Participants were instructed to deny possession of one of the cards and acknowledge possession of the other card during the scans. They were also told they could keep the $20 if they successfully concealed the identity of the “lie” card. A different researcher then scanned the subjects, telling them to respond to each trial as accurately and truthfully as possible. During the scans the subjects saw, in random order, the five of clubs and the seven of spades, and they responded with a button press indicating whether they held that card. Of course, one of these card types was the lie condition and the other was the truth condition. Various other cards were included as control conditions. Critically, the truth condition was the only response for which the subjects said “yes,” and there were only 24 of these yes-truth trials out of 432 total trials. This feature is important because it means that during the scan the subjects spend most of their time saying, “No, no, no, no, no, no,” while watching for the rare five of clubs so that they can say yes. The subjects probably think of the task essentially as one of detecting the five of clubs, which means that the neural signature of the supposed “truth” response is really just the neural signature of a target detection event.
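A toy illustration of this confound, using hypothetical trials: because “yes” was given only on truth trials, a rule that looks at nothing except whether the response was the rare “yes” separates truths from lies perfectly, without using any signal related to lying. The control card, the trial order, and the function names below are illustrative assumptions; only the design fact (yes responses occur only on truth trials, 24 of 432 trials) comes from the text.

```python
from typing import List, Tuple

# (card shown, response, condition); the trial list below is made up.
Trial = Tuple[str, str, str]


def rare_yes_detector(trial: Trial) -> str:
    """Label a trial using only whether the response was the rare 'yes'.

    This 'detector' knows nothing about deception; it simply flags the
    target-detection event.
    """
    _, response, _ = trial
    return "truth" if response == "yes" else "lie"


def accuracy_on_truth_and_lie(trials: List[Trial]) -> float:
    """Accuracy restricted to truth and lie trials (control cards ignored)."""
    scored = [t for t in trials if t[2] in ("truth", "lie")]
    hits = sum(rare_yes_detector(t) == t[2] for t in scored)
    return hits / len(scored)


trials = [
    ("5 of clubs", "yes", "truth"),
    ("7 of spades", "no", "lie"),
    ("2 of hearts", "no", "control"),
    ("7 of spades", "no", "lie"),
    ("5 of clubs", "yes", "truth"),
]
print(accuracy_on_truth_and_lie(trials))  # prints 1.0

# Share of all trials that were yes-truth trials in the design described above.
print(f"{24 / 432:.1%}")  # prints 5.6%
```

Any brain-based classifier trained on such data can exploit the same shortcut, which is why its accuracy says little about lying per se.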

When the researchers analyzed their data using group analysis, they found no significantly higher response for the lie condition than for the truth condition, thus failing to replicate their own 2002 study. The probable reason for this failure is that the truth condition was the salient target detection condition, so the lie condition was like a default case and hence did not activate any part of the brain to a greater extent. Next the researchers lowered the statistical threshold to p < .05 (uncorrected). However, the brain contains 30,000–40,000 voxels, and at p < .05 roughly 5 percent of them, on the order of 1,500 to 2,000 voxels, would be expected to reach significance even if what is being analyzed is pure random noise. Neuroimagers must correct for this “multiple comparisons” problem and generally do not accept the .05 threshold as legitimate without such corrections. Nonetheless, using p < .05 (uncorrected), Langleben and colleagues reported activations in the left inferior frontal gyrus for lie versus truth, commenting that “Lie related activation was less robust and limited to areas associated with context processing, left inferior frontal gyrus” (Langleben et al. 2005). In fact, this “result” did not merit any interpretation, because once the multiple comparisons are taken into account it is nowhere near significant.
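To make the multiple-comparisons point concrete, the Python sketch below draws pure-noise statistics for 30,000 simulated voxels and counts how many pass a two-tailed test at p < .05, with and without a correction. It assumes independent voxels (real fMRI voxels are spatially correlated) and uses the Bonferroni correction only because it is the simplest; neuroimaging analyses often use other corrections, such as cluster-based or false-discovery-rate methods. The function name and seed are illustrative.

```python
import random
from statistics import NormalDist


def count_significant_noise_voxels(n_voxels: int, alpha: float, seed: int = 0) -> int:
    """Count pure-noise voxels that pass a two-tailed z-test at level alpha.

    Each voxel's statistic is drawn from a standard normal distribution, so
    there is no real effect anywhere. Voxels are treated as independent.
    """
    rng = random.Random(seed)
    std_normal = NormalDist()
    significant = 0
    for _ in range(n_voxels):
        z = rng.gauss(0.0, 1.0)
        p = 2.0 * (1.0 - std_normal.cdf(abs(z)))  # two-tailed p-value
        if p < alpha:
            significant += 1
    return significant


n = 30_000
uncorrected = count_significant_noise_voxels(n, alpha=0.05)
bonferroni = count_significant_noise_voxels(n, alpha=0.05 / n)
# Expected false positives: 0.05 * 30,000 = 1,500 uncorrected; 0.05 with Bonferroni.
print(uncorrected, bonferroni)
```

Every voxel counted here is a false positive by construction, which is why an uncorrected p < .05 map of the whole brain cannot by itself support a claim about lie-related activation.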

In addition to the group analysis, Langleben and colleagues also performed an individual subject analysis in which they asked whether they could discriminate lies from truths. They used a classification method based on the data from the group analysis to test new subjects. They found that they could discriminate, on an individual trial basis, which responses were lies and which were truths 76 percent of the time. The “false alarm” rate, which is the rate of true responses incorrectly labeled lies, was 16 percent, and the miss rate, which is the rate of false responses incorrectly labeled truths, was 31 percent. However, because in this experiment the truths were rare target events, the ability to discriminate truths from lies probably just reflects the ability to distinguish rare events; it has nothing to do with lying per se.
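The text does not say exactly how the 76 percent figure was computed. As a consistency check only, if lies and truths are weighted equally, the stated false-alarm rate (16 percent) and miss rate (31 percent) imply about 76.5 percent correct, in line with the reported figure. The Python sketch below shows that arithmetic; the function names and the example confusion counts are illustrative assumptions.

```python
from typing import Tuple


def error_rates(lies_as_lie: int, lies_as_truth: int,
                truths_as_lie: int, truths_as_truth: int) -> Tuple[float, float]:
    """Return (false_alarm_rate, miss_rate) from a 2x2 confusion table.

    False alarm: a true response labeled a lie. Miss: a lie labeled true.
    """
    false_alarm = truths_as_lie / (truths_as_lie + truths_as_truth)
    miss = lies_as_truth / (lies_as_lie + lies_as_truth)
    return false_alarm, miss


def balanced_accuracy(false_alarm_rate: float, miss_rate: float) -> float:
    """Mean of the correct rates on lies and on truths, weighted equally."""
    return ((1.0 - miss_rate) + (1.0 - false_alarm_rate)) / 2.0


# Hypothetical counts that reproduce the rates quoted in the text.
fa, miss = error_rates(lies_as_lie=69, lies_as_truth=31,
                       truths_as_lie=16, truths_as_truth=84)
print(round(balanced_accuracy(fa, miss), 3))  # prints 0.765
```

If the two classes were not weighted equally (for example, because truths were much rarer than other trials), the overall percentage correct could differ from this figure.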

STUDY 3

In the final paper of the three that look at individual subjects, Davatzikos et al. (2005) analyzed the same data as the Langleben et al. (2005) paper. They just used fancier math. There is a lot of exciting work going on right now in computer science and math, where people are devising machine learning algorithms to find patterns in MRI data and other kinds of neuroscience data. Davatzikos and colleagues used some of these fancier methods to classify the responses, and they got 89 percent correct classification on a subject basis, not a trial basis. But now we have to consider what this means, and whether these lab experiments have anything to do with lie detection as it might be attempted in the real world.
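Machine learning classifiers differ in detail, but they share a train-then-test logic that can be sketched generically. The Python example below is not the Davatzikos et al. method: it substitutes the simplest possible classifier (nearest class centroid) and evaluates it with leave-one-subject-out cross-validation on made-up two-voxel patterns. It also shows a limitation relevant here: the classifier learns whatever consistently separates the two labels, whether that is lying or, as argued above, target detection.

```python
from math import dist
from typing import Dict, List, Sequence, Tuple

# (activation pattern, label, subject id); all values below are made up.
Example = Tuple[List[float], str, int]


def centroid(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def leave_one_subject_out_accuracy(examples: List[Example]) -> float:
    """Train on all subjects but one, test on the held-out subject, repeat."""
    subjects = sorted({subject for _, _, subject in examples})
    correct = total = 0
    for held_out in subjects:
        train = [e for e in examples if e[2] != held_out]
        test = [e for e in examples if e[2] == held_out]
        labels = sorted({label for _, label, _ in train})
        centroids: Dict[str, List[float]] = {
            lab: centroid([x for x, label, _ in train if label == lab])
            for lab in labels
        }
        for x, label, _ in test:
            # Nearest-centroid rule: pick the label whose mean pattern is closest.
            predicted = min(labels, key=lambda lab: dist(x, centroids[lab]))
            correct += predicted == label
            total += 1
    return correct / total


examples = [
    ([1.0, 0.1], "lie", 1), ([0.1, 1.0], "truth", 1),
    ([0.9, 0.0], "lie", 2), ([0.0, 0.9], "truth", 2),
    ([1.1, 0.2], "lie", 3), ([0.2, 1.1], "truth", 3),
]
print(leave_one_subject_out_accuracy(examples))  # prints 1.0
```

Holding out whole subjects matters: testing on trials from subjects the classifier has already seen would overstate how well it generalizes to a new person.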

REAL-WORLD IMPLICATIONS

To summarize all three of the individual subject studies, two sets of functional MRI data have been analyzed and used to distinguish lies from truth. Kozel and colleagues (2005) achieved a 90 percent correct response rate in determining which was the lie and which was the truth, when they knew in advance there would be one of each. Langleben and colleagues got a 76 percent correct response rate on individual trials, and Davatzikos and colleagues, analyzing the same data, got an 89 percent correct rate on a subject basis. The very important caveat is that in the last two studies it is not really lies they were looking at, but rather target detection events. Leaving that problem aside, these numbers aren’t terrible. And these classification methods are getting better rapidly, as are imaging methods. So who knows where all this will be in a few years. Accuracy could get much better still.

But there is a much more fundamental question. What does any of this have to do with real-world lie detection? Let’s consider how lie detection in the lab differs from any situation where you might want to use these methods in the real world. The first thing I want to point out is that making a false response when you are instructed to do so isn’t a lie, and it’s not deception. It’s simply doing what you are told. We could call it an “instructed falsehood.” Second, the kind of situation where you can imagine wanting to use MRI for lie detection differs in many respects from the lab paradigms that have been used in the published studies. For one thing, the stakes are incomparably higher. We are not talking about $20 or $50; we are talking about prison, or life, or life in prison. Further, the subject is suspected of a very serious crime, and they believe while they are being scanned that the scan may determine the outcome of their trial. All of this should be expected to produce extreme anxiety. Importantly, it should be expected to produce extreme anxiety whether the subject is guilty or not guilty of the crime. The anxiety does not result from guilt per se, but rather simply from being a suspect. Finally, and importantly, the subject may not be interested in cooperating, and all of the methods we have been discussing can be completely foiled by straightforward countermeasures.

Functional MRI data are useless if the subject moves more than a few millimeters. Even when we have cooperative subjects trying their best to help us and give us good data, we still throw out one of every five, maybe ten, subjects because they move too much. If they’re not motivated to hold still, it will be much worse. This is not just a matter of moving your head: you can completely mess up the imaging data just by moving your tongue in your mouth, or by closing your eyes so that you cannot read the questions. Of course, these things will be detectable, so the experimenter would know that the subject was using countermeasures. But there are also countermeasures subjects could use that would not be detectable, like performing mental arithmetic. You can probably activate all of those putative lie regions just by repeatedly subtracting seven in your head.

Because the published results are based on paradigms that share none of the properties of real-world lie detection, those data offer no compelling evidence that fMRI will work for lie detection in the real world. No published evidence shows lie detection with fMRI under anything even remotely resembling a real-world situation. Furthermore, it is not obvious how the use of MRI in lie detection could even be tested under anything resembling a real-world situation. Researchers would need access to a population of subjects accused of serious crimes, including, crucially, some who actually perpetrated the crimes of which they are accused and some who did not. In the brain, being suspected but innocent might look a lot like being suspected and guilty. For a serious test of lie detection, the subject would have to believe the scan data could be used in her case. For the data from individual scans to be of any use in testing the method, the experimenter would ultimately have to know whether the subject of the scan was lying. Finally, the subjects would have to be interested in cooperating. Could such a study ever be ethically conducted?

Before the use of fMRI lie detection can be seriously considered, it must be demonstrated to work in something more like a real-world situation, and those data must be published in peer-reviewed journals and replicated by labs without a financial conflict of interest.

ENDNOTES

REFERENCES

Davatzikos, C., K. Ruparel, Y. Fan, D. G. Shen, M. Acharyya, J. W. Loughead, R. C. Gur, and D. D. Langleben. 2005. Classifying spatial patterns of brain activity with machine learning methods: Application to lie detection. NeuroImage 28:663–668.

Epstein, R., and N. Kanwisher. 1998. A cortical representation of the local visual environment. Nature 392:598–601.

Kanwisher, N., J. McDermott, and M. Chun. 1997. The fusiform face area: A module in human extrastriate cortex specialized for the perception of faces. Journal of Neuroscience 17:4302–4311.

Kozel, F., K. Johnson, Q. Mu, E. Grenesko, S. Laken, and M. George. 2005. Detecting deception using functional magnetic resonance imaging. Biological Psychiatry 58:605–613.

Langleben, D. D., J. W. Loughead, W. B. Bilker, K. Ruparel, A. R. Childress, S. I. Busch, and R. C. Gur. 2005. Telling truth from lie in individual subjects with fast event-related fMRI. Human Brain Mapping 26:262–272.

No Lie MRI. 2006. Product overview. http://www.noliemri.com/products/Overview.htm.

O’Craven, K., and N. Kanwisher. 2000. Mental imagery of faces and places activates corresponding stimulus-specific brain regions. Journal of Cognitive Neuroscience 12:1013–1023.